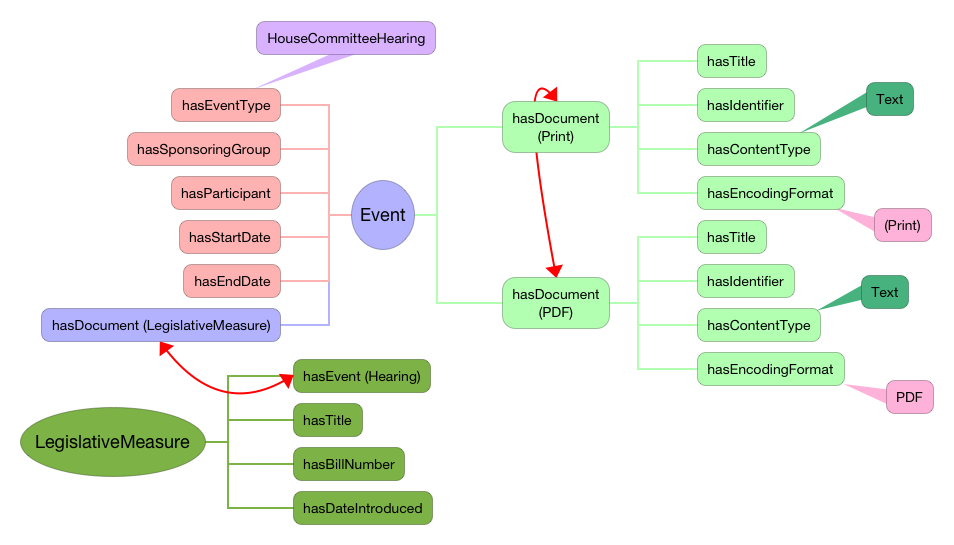

[Editor’s note: this post was co-authored by Tom Bruce, John Joergensen, Diane Hillmann, and Jon Phipps. References to the “model” here refer to the LII data model for legislative information that is described and published elsewhere.]

This post lays out some design criteria for metadata that apply to compilations of enacted legislation, and to the tools commonly used to conduct research with them. Large corpora discussed here include Public Laws, the Statutes at Large, and the United States Code. This “post-passage” category also takes in signing statements, and — perhaps a surprise to some — a variety of finding aids. Finding aids receive particular attention because

- they are critically important to researchers and to the public;

- they are largely either paper-based, or electronic transcriptions of paper-based aids. They provide an interesting illustration of a major design question: whether legacy data models should simply be re-cast in new technology, or rethought completely. Our conclusion is that legacy models (especially those designed for consumption by humans) typically embody reductive design decisions that should be rethought.

- they illustrate particular problems with identifiers. In particular, confusion between volume/page-number citations as identifiers for a whole entity, versus their use as references to a particular page milestone, is a problem. So is alignment with labels or containers that identify granular, structural units like sections or provisions, because such units can occur multiple times within a single page.

Let’s begin with a discussion of signing statements, which might be considered the “first stop” after legislation is passed.

Overarching issues

Existing metadata

Signing statements have been used by many presidents over the years as a way to record their position on new legislation. For most of our history, their use has been rare and noncontroversial. However, during the George W. Bush administration they were used to declare legal positions on the constitutionality of sections of laws being signed.

Since they had never previously been controversial, there had been little interest in collecting or indexing these documents in any systematic manner. With the change in their use, this attitude has changed, and there is a need to easily and quickly locate these documents, particularly within the context of the legislation to which they are linked.

Currently, Presidential signing statements are collected as part of the Weekly Compilation of Presidential Documents and Daily Compilation of Presidential Documents. These are collected and issued by the White House press secretary, and published by the Office of the Federal Register. As they are not technically required by law to be published, they do not appear in the Federal Register or in Title 3 of the Code of Federal Regulations.

Although they appear in the daily and weekly compilations, they are not marked or categorized in any particular manner. In FD/SYS, the accompanying MODS files include a subject topic, “bill signings”, that marks a document as related to that category of event. “Bill Signings” also appears in the MODS <category1> tag that exists in presidential documents. That designation, however, is also used for remarks as well as formal signing statements, and it is unclear whether it has been applied with any consistency. The MODS files for signing statements include no information designating the document as a signing statement, but only as a “PRESDOCU”. The MODS files do, however, have references to the public law to which they refer. They also have a publication date that matches the date on which the president signed the subject law.

In order to make signing statements findable, the existing links to relevant legislation that are already represented in the GPO MODS files should be built into the model, along with the publication date and a designation of the president who is issuing the statement. In addition, the categorization of a signing statement as a signing statement needs to be added in the same fashion in which we have categorized other documents, and implemented with consistency. If the use and study of signing statements continue as an important area of user inquiry, they will need to be identifiable.
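As a rough illustration of the properties just listed, a signing-statement record might be sketched as follows. The class name, field names, and sample values are all hypothetical; they are not drawn from the LII model or from GPO's MODS schema, and the records are placeholders rather than real documents.

```python
from dataclasses import dataclass
from datetime import date
from typing import Iterable, List


@dataclass(frozen=True)
class SigningStatement:
    """Hypothetical record; field names are illustrative only."""
    document_id: str   # identifier assigned by the publishing collection
    president: str     # the president issuing the statement
    published: date    # publication date (matches the signing date)
    public_law: str    # the Public Law the statement refers to
    # Explicit category, which the MODS files currently lack.
    document_type: str = "signing statement"


def statements_for_law(records: Iterable[SigningStatement],
                       public_law: str) -> List[SigningStatement]:
    """Return the statements linked to one Public Law, oldest first."""
    hits = [r for r in records if r.public_law == public_law]
    return sorted(hits, key=lambda r: r.published)


# Placeholder data, invented for the example.
records = [
    SigningStatement("doc-2", "President A", date(2001, 1, 2), "Pub. L. 000-1"),
    SigningStatement("doc-1", "President A", date(2001, 1, 1), "Pub. L. 000-1"),
    SigningStatement("doc-3", "President A", date(2001, 1, 3), "Pub. L. 000-2"),
]
linked = statements_for_law(records, "Pub. L. 000-1")
print([r.document_id for r in linked])
```

The point of the sketch is only that the link to the Public Law and the explicit document type are first-class fields, so that a statement can be found from the legislation it accompanies.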

Finally, as with all such documents, there is always a desire to assist the researcher and the public by including evaluation aids. It is tempting, for example, to indicate whether a statement includes a challenge to the constitutionality or enforceability of a law. We believe, however, that it would be a mistake to build this into the model. If interpretive aids of this kind are themselves properly linked to their related legislation, they will be easily found.

We have singled out signing statements because they appeared prominently among use cases we have collected, and in other conversations about the “post-passage” corpora. In reality, many other presidential documents relate closely to legislative materials before and after passage. We will consider them in later sections of this document as we encounter them in finding aids.

Forms of enacted Federal legislation

Enacted Federal legislation is published by many groups in many formats, including (among versions published by the legislative branch) Public Laws, the Statutes at Large, and the United States Code. Privately published editions of the US Code are also common (and indeed prevalent), either in electronic or printed form, and it is likely that their use exceeds that of the officially published versions.

Overarching issues

How do post-passage materials relate to existing systems such as THOMAS, congress.gov, or GovTrack?

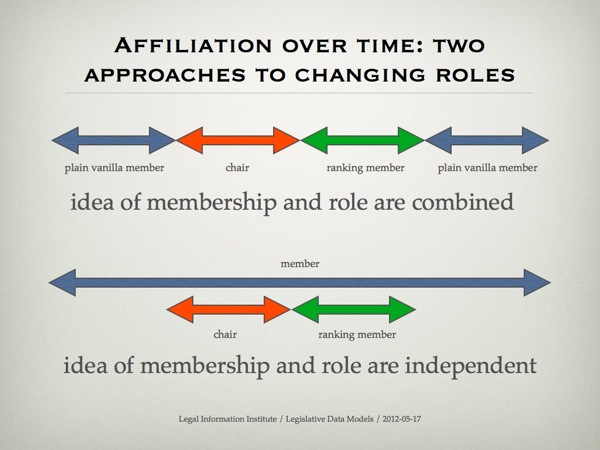

First, as to necessity: research needs have no respect for administrative boundaries or data stovepipes. Many researchers will wish to trace the history of a law from the introduction of a bill through to its final resting place in the US Code. As to means, our model incorporates a series of properties that describe the codification of particular legislative measures (or provisions); they might be applied at the whole-document or subdocument level. That essentially replicates what is found in Tables I, II and III as we describe them below. This area of the model might, however, require extension in light of more detailed information about the codification process itself. We are aware, for example, that current finding aids and the data in them make it far easier to find out what happened to a particular provision in a bill (forward tracing) than it is to find out where a particular provision in the US Code came from (reverse tracing), and that the finding aids do not support all common use cases with certainty.

Updating

Virtually every document we have encountered in our survey of legislative corpora becomes “frozen” at some point, either by being finalized, or by being captured as a series of sequential snapshots. That is not the case with the US Code, which is continually revised as new legislation is passed. This creates a series of updating problems that involve not only modeling the current state of the Code, but also:

- tracking new codification decisions,

- tracking changes in the state of material that has been changed, moved, or repealed,

- revising and archiving metadata that has been changed or rendered irrelevant by changes in the underlying material,

and so on.

It seems likely to us that there are both engineering and policy decisions involved here. Any legislative data model needs to have hooks that allow connection to more detailed models, maintained by others, that track codification decisions. Most use cases that look at statutes and ask, “what happened to that statute?” or “where did this come from?” will need those features. The policy question simply involves deciding whether and how to connect to data developed by others (for example, if it were desirable to trace legislation from congress.gov into the US Code). As to engineering, it may be simpler in the short run to simply model the finding aids that currently assist users in coping with the print-based stovepipes involved. That has drawbacks that we describe in some detail later on, but has the advantage of being relatively simple to do at the level of functionality that the print-based aids currently provide.

Whatever approach is taken, maintenance will be an issue; most automated approaches will require the direct acceptance of data originated by others. The Office of the Law Revision Counsel is building a system to track not only codified legislative text but to record the decisions taken. Linking to such a system would extend, at low cost, the capabilities of existing systems in very useful ways, but it is not clear whether OLRC will expose any of this tracking metadata for public use.

Identifiers and identifier granularity

Bills become Public Laws. Often, they are then chopped into small bits and sprayed over the US Code. Even the most coherent bill (and many fall far short of that mark) is a bundle of provisions that are related by common concern with a public policy issue (e.g., an “antitrust law”) or by their relationship to a particular constituency (e.g., a “farm bill”). The individual provisions might most properly relate to very different portions of the US Code; a farm bill could contain provisions related to income tax, land use, environmental regulation, and so on; many will amend existing provisions in the Code. Mapping and recording of the codification decisions involved is thus a major concern for modelers.

The extreme granularity of the changes involved can be seen, for example, in the Note to 26 USC 1, which contains literally hundreds of entries like the following:

2004—Subsec. (f)(8). Pub. L. 108–311, §§ 101(c), 105, temporarily amended par. (8) generally, substituting provisions relating to elimination of marriage penalty in 15-percent bracket for provisions relating to phaseout of marriage penalty in 15-percent bracket. See Effective and Termination Dates of 2004 Amendments note below.

For our purposes here it is the mapping of the Public Law subsection to a named paragraph in the codified statute that is interesting. It proclaims the need for identifiers at a very fine-grained level. The XML standard used by the House and Senate for legislation contains mechanisms for markup and identification down to the so-called “subitem” level, which is the lowest level of named container in bills and resolutions (the text in our example is actually at the “subsection” level of the Act). It seems to us unlikely that mapping is consistently between particular levels of the substructure (that is, it seems unlikely that sublevel X in the Public Law always maps to something at sublevel Y of the US Code). Sanity checking, then, will be difficult.
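The mismatch in levels can be made concrete with a small sketch of the 2004 entry quoted above. The `Granule` and `Amendment` types are hypothetical names introduced for illustration; they are not part of the House and Senate XML standard or of the LII model.

```python
from typing import List, NamedTuple, Tuple


class Granule(NamedTuple):
    """A named container inside a document, addressed by its label path."""
    document: str
    path: Tuple[str, ...]   # labels from the section level downward

    @property
    def depth(self) -> int:
        return len(self.path)


class Amendment(NamedTuple):
    source: Granule   # provision of the Public Law
    target: Granule   # provision of the US Code that it amends


# The 2004 entry: sections 101(c) and 105 of Pub. L. 108-311 both
# amend paragraph (8) of subsection (f) of 26 USC 1.
target = Granule("26 USC", ("1", "f", "8"))
amendments: List[Amendment] = [
    Amendment(Granule("Pub. L. 108-311", ("101", "c")), target),
    Amendment(Granule("Pub. L. 108-311", ("105",)), target),
]

# Source and target depths do not line up, so a simple
# "level X always maps to level Y" check is not available.
depth_pairs = [(a.source.depth, a.target.depth) for a in amendments]
print(depth_pairs)
```

Even within this single entry, a subsection and a whole section of the Act map to the same paragraph of the Code.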

US Code identifiers

Identifiers within the US Code provide some interestingly dysfunctional examples. They can usefully be thought of as having three basic types: “section” identifiers, which (sensibly) identify sections, “subsection” identifiers, which apply to named chunks within a section, and “supersection” identifiers, which identify aggregations of materials above the section level but below the level of the Title: subtitles, parts, subparts, chapters, and subchapters.

Official citation takes no notice of supersection identifiers, but many topical references in other materials do. Chapters should get particular attention, because they are often containers for the codified version of an entire Act. Supersection identifiers are confusing and problematic when considered across the entire Code, because identical levels are labelled differently from Title to Title. For example, in most, the “Part” level occurs above “Chapter” in the hierarchy, and in some, that order is reversed. It should also be noted that practically any supersection — no matter how many other levels may exist beneath it in the hierarchy — can have a section as its direct descendant. There are also “anonymous” supersections that are implied by the existence of table-of-contents subheadings that have no official name; these appear in various places in the Code.

To our way of thinking, this suggests that the use of opaque identifiers for the intermediate supersections is the best approach for unique identification. Path-based accessors that use level-labels such as “subtitle” and “section” are obviously useful, too, however confusing they might seem when accessors from different titles with different labelling hierarchies are compared side by side.

As to section identifiers, the main problem is that years of accumulated insertions have resulted in an identifier system that appears far from rational. For example, “1749bbb-10c” is a valid section number in Title 12. It may nevertheless make sense to use citation as the basis for identifier construction rather than making the identifiers fully opaque. As to subsection labeling, it is pretty consistent throughout the Code, and can be thought of as an extension to the system of section identifiers.
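One way to combine the two approaches is to give every node an opaque identifier and derive the path-based accessor from the hierarchy. The sketch below is an assumption about how that might look; `CodeNode` is a hypothetical name, and the chapter number is invented because the post does not say where the example section sits within Title 12.

```python
import uuid
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class CodeNode:
    label: str                            # level label as used in this Title
    number: Optional[str]                 # None for an "anonymous" supersection
    parent: Optional["CodeNode"] = None
    # Opaque identifier: carries no meaning, so it survives relabelling.
    opaque_id: str = field(default_factory=lambda: uuid.uuid4().hex)

    def path(self) -> str:
        """Human-readable accessor built from level labels, root first."""
        parts = []
        node = self
        while node is not None:
            parts.append(f"{node.label}-{node.number}" if node.number else node.label)
            node = node.parent
        return "/".join(reversed(parts))


title = CodeNode("title", "12")
chapter = CodeNode("chapter", "99", parent=title)   # hypothetical chapter number
section = CodeNode("section", "1749bbb-10c", parent=chapter)
print(section.path())
```

The opaque identifier stays stable if a Title relabels or reorders its levels, while the path remains available as a convenience for human readers.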

Public Laws, Statutes at Large, and the United States Code

Existing metadata

Traditional library approaches to these complex sets of materials have been very simple: they’ve been cataloged as ‘serials’ (open ended, continuing publications), with very little detail. That allows libraries to represent the materials in their catalogs, and to provide a bibliographic record that acts as a hook for check-in data, and is used to track receipt and inventory of individual physical volumes. In the law library context, where few users access these basic resources through a catalog, this approach has been sufficient, efficient and low-maintenance.

However, as this information ‘goes digital’, that strategy breaks down in some predictable ways, many of which we’ve documented elsewhere in this project’s papers; the biggest is that much of the time we would like more detailed information about smaller granules than the “serial” approach contemplates. As we make a fuller transition to digital access of this information, these limited approaches no longer provide even minimal access to this critical material.

Finding aids

There are a good many finding aids that can be used to trace Federal legislation through the codification process, and to follow authority relationships between legislative- and executive-branch materials, such as presidential documents and the Code of Federal Regulations. All were originally designed for distribution in tabular form, at first on paper, and more recently on Web pages. In the new environment we imagine, the approach they represent is problematic. It may nevertheless be worthwhile to model the finding aids themselves for use in the short term, as better implementations require significant analysis and administrative coordination.

General problems

Deficiencies of print representations

A look at the Parallel Table of Authorities [PTOA] shows where the problems are likely to be found. Like all other tabular finding aids that originate in print, it was designed for consumption by human experts capable of fairly sophisticated interpretation of its contents. It embeds a series of reductive design decisions that trade conciseness against the need for some “unpacking” by the reader. Conciseness is a virtue in print, but it is at best unnecessary and at worst confusing when the data is to be consumed and processed by machines. A couple of examples will illustrate:

- Some PTOA entries map ranges of US Code sections against ranges of CFR Parts, in what appears to be a many-to-many relationship. It is unlikely that every pair that we could generate by simple combinatorial expansion represents a valid authority relationship. Indeed, as we shall see, the various finding aids differ considerably in the meaning they assign to a “range” of sections and in the treatment that they intend for them.

- The table simply states that there is a relationship between each of the two cells in every row of the table, without saying what it is. The name of the table would lead the reader to believe that the relationship is one of authorization, but in fact other language around the table suggests that there are as many as four different types of relationship possible. These are not explicitly identified.

To model the finding aid, in this case, would be to perpetuate a less-than-accurate representation of the data. As a practical matter of software project planning and management, it might be worth doing so anyway, in order to more quickly provide users with a semi-automated, electronic version of something familiar and useful. But that is not the best we could do. Most of the finding aids associated with Federal statutes have similar re-modeling issues, and should be reconceived for the Semantic Web environment in order to achieve better results.
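The combinatorial-expansion problem in the first PTOA example can be shown in a few lines. The section and Part numbers below are invented placeholders, not rows from the real table, and the dictionary layout is only one possible way to keep a row unexpanded.

```python
from itertools import product
from typing import List, Tuple


def naive_expansion(usc_sections: List[str],
                    cfr_parts: List[str]) -> List[Tuple[str, str]]:
    """Expand one range-to-range row into every possible pair."""
    return list(product(usc_sections, cfr_parts))


# Invented placeholder values, not taken from the real table.
usc_sections = ["101", "102", "103"]
cfr_parts = ["10", "11"]

pairs = naive_expansion(usc_sections, cfr_parts)
# One printed row becomes six asserted relationships, and the row
# itself gives no way to tell which of them are real.
print(len(pairs))

# Alternative: record the row once, at range level, and leave the
# relationship type explicitly unspecified until it is known.
row = {"usc": usc_sections, "cfr": cfr_parts, "relation": "unspecified"}
```

Keeping the row as a single range-level statement avoids asserting pairs that the table never claimed, at the cost of less precise links.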

Identifier granularity and alignment

Most of the finding aids make use of granular references; in the case of Public Laws, these are often at the section level or below, and in the case of the US Code they are often to named subsections. The granularity of references may or may not be reflected in the granularity of the structural XML markup of any particular edition of those resources.

The Statutes at Large use a page-based citation system that creates two interesting modeling issues. First, on its own, a page-based citation is not a unique identifier for a statute in Stat. L., because more than one may appear on one page. Second, it was not ever thus. Stat. L. has used three different numbering schemes at various times, each containing ambiguities. These would be extraordinarily difficult to resolve under any circumstances, and particularly so given the demands of codification we describe later in the section on the Table III finding aid. Taking these two things together, it seems that there is no way to accurately create a pinpoint link between a provision of an Act in its Public Law format and a specific location in the Statutes at Large; the finest resolution possible is at page granularity.

It would thus seem that the most sensible approach would be to use a somewhat “loose and floppy” relationship like “isPublishedAt” to describe the relationship involved, since the information available from the Table does not really support pinpoint accuracy. That is unfortunate, in that there are important use cases that need such links. For example, statutes are frequently described in judicial opinions using citations that refer only to the Statutes at Large, sometimes because the case in question predates the US Code and no other reference can exist, and sometimes because the writer has omitted other citation. It is effectively impossible to construct a pinpoint link if the cite contains a subsection reference; one has to cite to the nearest page, relying on the reader to find the relevant statute on the page somewhere. It would be equally difficult to trace through a Stat.L. citation to the relevant provision of the US Code in situations where the USC citation has been omitted.

In short, identifiers in this part of the legislative jungle have two problems: first, they sometimes do not exist at a sufficiently granular resolution in the relevant XML versions, and second, granular identifiers do not resolve or map well to materials whose citation has traditionally been based on print volume and page numbers.
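The page-granularity limit can be illustrated with a minimal index keyed on volume and page. Everything here is a sketch under stated assumptions: `StatPageIndex` and `is_published_at` are hypothetical names echoing the “isPublishedAt” relationship, and the two laws and their page numbers are invented.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


class StatPageIndex:
    """Maps (volume, page) to every statute printed on that page."""

    def __init__(self) -> None:
        self._by_page: Dict[Tuple[int, int], List[str]] = defaultdict(list)

    def add(self, law_id: str, volume: int,
            first_page: int, last_page: int) -> None:
        for page in range(first_page, last_page + 1):
            self._by_page[(volume, page)].append(law_id)

    def is_published_at(self, volume: int, page: int) -> List[str]:
        """Return all candidate statutes; a page cite alone cannot choose."""
        return list(self._by_page.get((volume, page), []))


index = StatPageIndex()
index.add("law-A", volume=1, first_page=10, last_page=11)  # invented data
index.add("law-B", volume=1, first_page=11, last_page=11)
print(index.is_published_at(1, 11))
```

A lookup returns a list rather than a single statute, which is the honest answer whenever more than one statute shares a page.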

Identifiers for Presidential documents: general characteristics

Some of the finding aids we describe below provide mappings between Presidential documents and the codified statutes in the US Code. Identifiers for Presidential documents are assigned by the Office of the Federal Register, and are typically accession numbers. It is worth noting that OFR provides a number of finding aids and subject-matter descriptions of Presidential documents, though these are beyond our scope here.

As to GPO, it appears at first blush that the MODS metadata for the US Code as found in FD/SYS does not reflect associations with Executive Orders, although they are vaguely modeled in the MODS files associated with the Executive Orders themselves. There would be some virtue in being able to find information in both directions. That is especially true in situations where the state of the law cannot be fully understood without referring to both the Code and related Executive Orders simultaneously. For example, 4 USC 1, in its most current version, claims that there are 48 stars on the flag of the United States; it is only possible to find out where the other two came from by referencing the Executive Orders that accompanied statehood for Alaska and Hawai’i.

For the general public, the Table of Popular Names (TOPN) is probably the single most useful finding aid for Federal legislation. That is because it bridges the gap between popular accounts of legislation (for example, in the news media) and the codified collections of laws that are in effect. Where, exactly, do we find the Lilly Ledbetter Fair Pay Act in the modern statute book? The answer to that question isn’t obvious.

Broadly — very broadly — there are two ways in which an Act may be codified. First, it could be moved into the Code wholesale, typically as a new Chapter containing numbered sections that reflect the section divisions in the Act. Second, it could be disassembled into a bag of provisions and scattered all over the Code, with each section placed in a region of the Code dictated by its subject matter. In such cases, the notes to the Code section that describes the “Short Title” of the Act generally contain a roadmap of what has been done with the rest of it. That also happens when the Act contains language that consists entirely of instructions for amending existing statutes already codified.

For example, the TOPN entry for the Lilly Ledbetter Fair Pay Act looks like this:

Lilly Ledbetter Fair Pay Act of 2009

Pub. L. 111-2, Jan. 29, 2009, 123 Stat. 5

Short title, see 42 U.S.C. 2000a note

It maps the identifier for the Public Law version of the Act to the Statutes at Large, with a page reference to the Stat. page on which the Act begins. It also maps to the “Short Title” section of the USC, whose note contains information about what has been done with the Act.

Short Title of 2009 Amendment

Pub. L. 111–2, § 1, Jan. 29, 2009, 123 Stat. 5, provided that: “This Act [amending sections 2000e–5 and 2000e–16 of this title and sections 626, 633a, and 794a of Title 29, Labor, and enacting provisions set out as notes under section 2000e–5 of this title] may be cited as the ‘Lilly Ledbetter Fair Pay Act of 2009’.”
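The citation line of the TOPN entry shown earlier is regular enough to be split into its parts. The following is a minimal sketch, assuming the line always follows the pattern of that one example; the regular expression and the function name `parse_topn_cite` are illustrative and have not been tested against the full table.

```python
import re
from typing import Dict, Optional

# Accepts either a hyphen or an en dash between Congress and law number.
TOPN_CITE = re.compile(
    r"Pub\. L\. (?P<congress>\d+)[-\u2013](?P<law>\d+), "
    r"(?P<date>[A-Z][a-z]+\.? \d{1,2}, \d{4}), "
    r"(?P<volume>\d+) Stat\. (?P<page>\d+)"
)


def parse_topn_cite(line: str) -> Optional[Dict[str, str]]:
    """Split a TOPN citation line into named parts, or return None."""
    match = TOPN_CITE.search(line)
    return match.groupdict() if match else None


parsed = parse_topn_cite("Pub. L. 111-2, Jan. 29, 2009, 123 Stat. 5")
print(parsed)
```

Note that the `volume` and `page` values recovered this way identify only the page on which the Act begins, so they carry the same whole-entity versus page-milestone ambiguity discussed elsewhere in this post.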

This entry makes an important point about codified legislation. While it is natural to believe that codification consists of taking something that contains entirely new legislative language, breaking it into pieces, and plugging the pieces into the Code (or substituting them for old ones), that is not exactly what happens much of the time. Any Act could be, and often is, a laundry list of directives to amend existing codified statutes in some way or other. In such cases, the text of the Act is not incorporated into the Code itself, but into the Notes, in a manner similar to the example just given. That is a subtle difference, but an important one, as we shall see in the discussion of Table III below. It introduces an extra layer of mapping into the process, in a way that is partially obscured by the fact that inclusion is in the Notes rather than in the text of the Code. One result of this is that, in general, it is easier to look at a current provision and find out where it came from than it is to look at an historical provision and find out what happened to it.

From a data modeler’s perspective, the TOPN is useful but not necessary; the necessary finding aid can be constructed by aggregating data from other tables, or by simply referring to the short titles and popular names given in the text of the Act itself. The relationships modeled by TOPN aggregate information:

- from the Acts or bills themselves (House and Senate identifiers for bills, and the name of the Act as it’s found in either the bill or (better) in the Public Law version);

- from Table III, which describes where the Public Law is codified; and

- from Table I, which models an extra “change of address” that is applied in cases where codified legislation has been reorganized for passage into positive law.

Table I describes the treatment of individual sections in Titles that have been revised for enactment as positive law. The Table is a straightforward mapping of “old” section numbers in a Title to “new” section numbers that apply after the Title was made into positive law. As such, Table I entries also have a temporal dimension — the mappings need only be applied when tracing a citation to the Code as it existed before the date of positive law enactment to a location in the Code after that date.

A relational-database expert obsessed with normalization would say that Table I is, then, really two tables — one that maps old sections to new sections within a Title, and a second, implied table that says whether or not each of the 51 Titles has been enacted into positive law, and if so, when. The researcher wanting to trace a particular reference would follow this heuristic:

- Does my reference fall within a positive-law Title?

- If so, does my reference precede the date of enactment into positive law?

- If so, what is the number of the “new” section?

Thus, the model will need to reflect properties of the Title itself (“enactedAsPositiveLaw”) and of the mapping relationship of old to new (“hasPositiveLawSection”).
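The three-step heuristic above can be sketched as a lookup over the two tables. The dictionary names mirror the two properties just mentioned, but the data is invented: Title 99, its enactment date, and the section numbers are placeholders, and returning `None` for an unmapped section is an assumption about how a gap in Table I might be signalled.

```python
from datetime import date
from typing import Dict, Optional, Tuple

# Implied table: Title number -> date of enactment as positive law.
# Title 99 and its date are invented for the example.
ENACTED_AS_POSITIVE_LAW: Dict[int, date] = {99: date(2000, 1, 1)}

# Table I proper: (Title, old section) -> new section. Invented row.
HAS_POSITIVE_LAW_SECTION: Dict[Tuple[int, str], str] = {(99, "12a"): "101"}


def resolve_section(title: int, section: str, cited_on: date) -> Optional[str]:
    """Return the section number to use today for a citation made on cited_on."""
    enacted = ENACTED_AS_POSITIVE_LAW.get(title)
    if enacted is None:
        return section          # step 1: not a positive-law Title
    if cited_on >= enacted:
        return section          # step 2: citation already uses new numbering
    # Step 3: look up the new section; None means Table I has no entry.
    return HAS_POSITIVE_LAW_SECTION.get((title, section))


print(resolve_section(99, "12a", date(1990, 6, 1)))
```

The temporal test in step 2 is what makes Table I more than a simple renumbering list: the same citation string resolves differently depending on when it was written.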

The United States Code was preceded by an earlier attempt at regularized organization, the Revised Statutes of 1878. Citations to the Revised Statutes are to sequentially-numbered Sections, with “Rev.Stat.” as the series indicator. Table II provides a map between Rev. Stat. cites and sections of the US Code, along with a number of status indicators; the two most important (and common) of these indicate that a statute has been repealed, or that Table I needs to be applied because the classification shown was done prior to positive-law enactment of the Title.

Unlike other finding aids we describe, where the meaning of mappings between ranges and lists of things can be both combinatorial and ambiguous, Table II appears straightforward. A list or range of items in the Rev. Stat. columns can be mapped one-to-one to the corresponding list or range in the USC column. The first element in the list or range in Rev. Stat. maps to the first element in the list in USC, the second to the second, and so on. Simple reciprocal relationships should obtain.
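The positional reading of a Table II row can be sketched as follows. The helper names are hypothetical and the row is invented. The range expander handles only purely numeric labels, which is a simplifying assumption: real section numbers can contain letters and hyphens, so a production parser would need more care.

```python
from typing import Dict, List


def expand_range(cell: str) -> List[str]:
    """Expand "5-7" to ["5", "6", "7"]; purely numeric labels only."""
    if "-" not in cell:
        return [cell]
    start, end = cell.split("-")
    return [str(n) for n in range(int(start), int(end) + 1)]


def pair_positionally(rev_stat: List[str], usc: List[str]) -> Dict[str, str]:
    """Map the first Rev. Stat. item to the first USC item, and so on."""
    if len(rev_stat) != len(usc):
        raise ValueError("ranges differ in length; not a one-to-one row")
    return dict(zip(rev_stat, usc))


# Invented row, for illustration only.
mapping = pair_positionally(expand_range("5-7"), expand_range("21-23"))
print(mapping)
```

The length check is the useful part: a row whose two sides differ in length cannot be read one-to-one, and should be flagged rather than silently truncated.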

That is particularly important in light of the relationship between Table II and Table III. For all statutes passed before 1874, Table III entries refer to the Revised Statutes, not to the US Code. So, to determine where those statutes may still exist as part of the US Code, reference must be made first to Table III, to obtain the R.S. section where the statute was first encoded, and then to Table II, to determine where that R.S. section was re-encoded in the US Code. Without the straightforward, one-to-one relationship between the R.S. and the US Code expressed in Table II, it would not be possible to connect pre-1874 statutes to current US Code sections.
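The two-step lookup for pre-1874 statutes amounts to chaining the two tables. In this sketch the table contents and identifier strings are invented placeholders, and the status indicators that Table II also carries (such as repeal) are deliberately left out.

```python
from typing import Dict, Optional

# Invented rows; keys and values are placeholder identifiers.
TABLE_III: Dict[str, str] = {"statute-x": "R.S. 100"}   # statute -> Rev. Stat. section
TABLE_II: Dict[str, str] = {"R.S. 100": "99 USC 5"}     # Rev. Stat. section -> US Code


def trace_pre_1874(statute_id: str) -> Optional[str]:
    """Follow Table III, then Table II; None if either step has no entry."""
    rs_section = TABLE_III.get(statute_id)
    if rs_section is None:
        return None
    return TABLE_II.get(rs_section)


print(trace_pre_1874("statute-x"))
```

The chain only works because each Table II row resolves to a single target; an ambiguous second step would make the final answer a set of candidates instead.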

Table III, which maps individual provisions within Public Laws to pages in the Statutes at Large and to sections of the US Code, exhibits a number of interesting problems. Here is how one such mapping appears in the LRC’s online tool:

In this case, we’re mapping the individual provisions of PL 110-108 (readable at http://www.gpo.gov/fdsys/pkg/PLAW-110publ108/html/PLAW-110publ108.htm ) to a range of pages in the Statutes at Large and to sections in the US Code (and their notes). The GPO version helpfully contains markers for the Stat. L. page breaks. Some noteworthy observations:

- The Public Law needs section-level identifiers. Notes sections within the USC need their own identifiers, as do pages within the Statutes at Large.

- Since the Stat. L. citation for the Act always goes to the first page of the Act as it appears in Stat. L., there is ambiguity between

  - 121 Stat. 1024, the citation/identifier indicating the whole Act for purposes of external citation, and

  - 121 Stat. 1024, the single-page reference that describes where Section 1 of the Act can be found (and for that matter, supposedly, some of Sections 2-6 as well).

- For some time periods, chapter numbers would disambiguate individual laws where more than one statute appears on a single page, although as we have seen, chapter numbers have uniqueness problems of their own. Chapter numbers play no role in this example, as they were not used after 1957.

- The Act is classified to the notes in the relevant USC sections.

  - In the case of section 1 of the Act, the notes simply state the name of the Act.

  - In the case of section 151, the entire text of the legislation appears in the notes for the Act. It would appear that it is done this way because the legislation’s provisions amount to a series of instructions for amending existing statutes, and thus can’t be codified per se. Rather, they are a description of what should be done to change things that have been codified already.

- GPO’s MODS file is evidently created by machine extraction of USC citations, because it incorrectly identifies the Act as modifying 26 USC 4251. It’s possible, though, that the presence of a USC section in the MODS file might simply mean “found at the scene of the crime by our parser” rather than “changed by the Act”. The relationship is unclear, and may be impossible to express clearly in XML.

- GPO’s MODS file for the Act treats the mapping implied by the second line of the example pretty loosely, describing small collections of US Code and Stat. L. pages associated with the Act, but not describing any particular relationship between the items in each collection or between collections. This is, again, a place where XML falls short of what is possible in an RDF-based, machine-readable model.

The second line of the table entry is the most interesting. At first glance, it appears to describe a many-to-many relationship between a range of sections in the Act and a range of pages in the Statutes at Large. But it seems improbable that such a relationship would actually describe anything useful, and a quick side-by-side look at the Act and the Statute shows that such an interpretation is incorrect. The actual arrangement of page breaks in Stat. L. would indicate that the mapping should be otherwise:

- Section 2 appears in its entirety on 121 Stat. 1024.

- Section 3 spans the break between 1024 and 1025.

- Section 4 spans 1025 and 1026.

- Sections 5 and 6 appear in their entirety on 1026.

Why is that? The simplest explanation is that the entries in the table — numbers separated by a dash — do not represent lists of individual sections. Instead, they represent clusters of sections that are related to each other as clusters. They seem to be saying, “somewhere in this clump of legislative language, you’ll find things that relate to things in this other clump of legislative language, and the clumps span multiple sections or provisions, possibly ordered differently in each document”.

Looking at the text itself — which is a series of detailed, interrelated amending instructions — shows that indeed it would be a horrible (and likely very confusing) task to pick the provisions apart into a fully granular mapping, leaving “cluster-to-cluster” mapping as the only viable strategy for describing the relationship between the two texts.
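The page-break observations listed earlier for Sections 2 through 6 can be recorded directly and inverted, which shows why a page reference cannot pinpoint a section. The variable names are hypothetical, and the `cluster` dictionary is only one possible way to express a cluster-level statement.

```python
from collections import defaultdict
from typing import Dict, List

# Pages of 121 Stat. on which each section of the Act appears,
# as read from the page-break observations for Sections 2-6.
SECTION_PAGES: Dict[str, List[int]] = {
    "2": [1024],
    "3": [1024, 1025],
    "4": [1025, 1026],
    "5": [1026],
    "6": [1026],
}

# Invert the mapping: which sections touch each page?
pages_to_sections: Dict[int, List[str]] = defaultdict(list)
for section, pages in SECTION_PAGES.items():
    for page in pages:
        pages_to_sections[page].append(section)

# A cluster-level statement keeps both sides as sets of members and
# claims nothing about which member relates to which.
cluster = {
    "sections": sorted(SECTION_PAGES),
    "pages": sorted(pages_to_sections),
}
print(dict(pages_to_sections))
```

Every page in the range carries at least two sections, so even this relatively clean re-publication relationship resolves only to page granularity.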

A detailed model of Table III would then require:

- describing each section or subsection (granule) within the Public Law as one that is either

- describing each target in the USC as either

  - an actual statute, or

  - notes to the statute. It is worth remarking that, in any of the finding aids, the fact that something has been classified to the notes provides a clue as to what that thing is and what the nature of the classified relationship might be. This may indicate a need for subproperties that would be accommodated in some future extension.

- distinguishing relationships that involve re-publication (as between Public Laws and Statutes at Large) from those that involve restatement or codification (as between either of those and the US Code)

- using different properties to describe provision-to-provision and cluster-to-cluster relationships

Taken together, these requirements would form an approach that would more accurately model the relationships the original Table was meant to model. In some sense this is an interpretive act — any Table that records codification decisions does, after all, record a set of interpretations, and so will its model. But in this case the interpretation is an official one, entrusted to the Law Revision Counsel and in any case practically unavoidable.
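One way to picture these requirements is as a small typed record for each mapping. The sketch below is hypothetical: the class names, the enum values, and the placeholder identifiers such as `act:sec-2` are assumptions made for illustration, not properties defined by the model.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional, Tuple


class TargetKind(Enum):
    STATUTE = "statute"  # text of the Code itself
    NOTE = "note"        # notes attached to a Code section


class RelationKind(Enum):
    REPUBLICATION = "republication"  # e.g. Public Law to Statutes at Large
    CODIFICATION = "codification"    # e.g. Public Law to US Code


class Scope(Enum):
    PROVISION = "provision-to-provision"
    CLUSTER = "cluster-to-cluster"


@dataclass(frozen=True)
class Mapping:
    source: Tuple[str, ...]   # one or more source identifiers
    target: Tuple[str, ...]   # one or more target identifiers
    relation: RelationKind
    scope: Scope
    target_kind: Optional[TargetKind] = None  # only meaningful for USC targets

    def __post_init__(self):
        # A provision-level mapping relates exactly one granule to one target.
        one_to_one = len(self.source) == 1 and len(self.target) == 1
        if self.scope is Scope.PROVISION and not one_to_one:
            raise ValueError("provision-to-provision mappings must be one-to-one")


# Hypothetical cluster mapping: a clump of Act sections classified to notes.
example = Mapping(
    source=("act:sec-2", "act:sec-3", "act:sec-4"),
    target=("usc:target-a", "usc:target-b"),
    relation=RelationKind.CODIFICATION,
    scope=Scope.CLUSTER,
    target_kind=TargetKind.NOTE,
)
```

Keeping scope and relation type as separate fields means a consumer can ask for cluster-level codification mappings without having to infer either from the shape of the identifiers.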

Table IV “lists the Executive Orders that implement general and permanent law as contained in the United States Code”. Executive Orders are instructions from the President mandating an action, reorganization, or policy change in some part of the executive branch. They are promulgated pursuant to statutory authority and, as lawful orders of the chief executive, have the force of law. They are published in the Federal Register and appear in the annual Compilation of Presidential Documents. They are sequentially numbered, but are also identified by date of signing, title, and the authoring president. All four of these identifying attributes are specified in the GPO MODS files that accompany these documents in FD/SYS. In addition, the MODS files give a reference to the volume and issue number of the Weekly Compilation in which the order appears. Finally, they typically include a reference to the enabling law as well.

The Table shows that:

- Executive Orders have identifiers, apparently accession numbers that run from the beginning of time.

- Nearly all refer to the “notes” attached to sections of the USC, since (as the description says) Executive Orders are typically implementation instructions independent of the language of the statute itself.

References to the notes have special features worth remarking. Often, the mapping is given to the note preceding (“nt. prec.”) a particular section. That distinctive language is rooted in the way that the LRC conceives of the Code’s structure. In the minds of the LRC, the Code consists of Titles that are divided into sections. Intermediate levels of aggregation — subtitles, parts, subparts, chapters, and subchapters — are convenient fictions used to organize the material in a manner similar to the tabs found in a card catalog. Thus, the “note preceding” a section is most often a note that is attached to the chapter of which the section is a part (chapters are typically, but not always, the level that aggregates sections, and often correspond to an Act as a whole). As modelers, we’re presented with a choice between fictions: either we join LRC in pretending that the intermediate levels of aggregation don’t exist, or we make use of them. The latter presents other problems with representing parent-child relationships in the structure, but fortunately that is a concern for XML markup designers and not so much for us.

It would seem that the best approach might be to model both sets of relationships: a hierarchical structure based on aggregations, and a sequential structure suggested by the “insertion model” just described. In terms of the model, this is just a matter of making sure that identifiers are in place that will facilitate both approaches. The main issues raised by this approach have to do with XML markup and encoding. As with other corpora we have encountered (e.g. the Congressional Record), user needs demand, and the model can accommodate, far more than the current publicly available XML encoding of the document will support.

Thus, we would end up with:

- a set of unique identifiers for sections, based on title and section numbers and thus reflecting current citation practice;

- a set of sub-section identifiers that extend section identifiers in a way that is based on nested subsection labeling;

- a set of super-section identifiers that is based on human-readable hierarchy, represented as paths, e.g. “/uscode/title42/subtitle1/part3/subpart5/chapter7/subchapterA”;

- a set of completely opaque identifiers for both section and supersection levels. There is less need for this at the subsection level, but any such system could easily be extended;

- parent-child relationships between

- next-previous relationships between sections. These should take no account of supersection boundaries.

As we’ve said in other contexts, it is worthwhile to remember that nothing limits us to a single identifier for any object.
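A short Python sketch can show how several identifiers might coexist for the same object. Only the path format for super-section identifiers comes from the example above. The section and subsection formats, the use of `uuid5` to derive opaque identifiers, and all function names are assumptions made for illustration.

```python
import uuid


def section_id(title, section):
    """Citation-based identifier built from title and section numbers."""
    return f"/uscode/title{title}/section{section}"


def subsection_id(title, section, *labels):
    """Extend a section identifier with nested subsection labels."""
    return section_id(title, section) + "".join(f"/{label}" for label in labels)


def supersection_path(title, *levels):
    """Human-readable hierarchy path; levels are (kind, label) pairs."""
    return f"/uscode/title{title}" + "".join(f"/{kind}{label}" for kind, label in levels)


def opaque_id(readable_id):
    """Opaque identifier derived from a readable one (an illustrative choice)."""
    return str(uuid.uuid5(uuid.NAMESPACE_URL, readable_id))


def next_previous(section_ids):
    """Link sections in sequence, taking no account of supersection boundaries."""
    return {
        sid: {
            "previous": section_ids[i - 1] if i > 0 else None,
            "next": section_ids[i + 1] if i + 1 < len(section_ids) else None,
        }
        for i, sid in enumerate(section_ids)
    }
```

Because the next-previous links are computed over a flat list of sections, they stay valid even if the intermediate levels of aggregation are reorganized or ignored entirely.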

Table V maps Presidential proclamations to the US Code. Proclamations differ from Executive Orders in that they do not “legislate” as such. Rather, they are issued to commemorate a significant event, or other similar occasion. Like Executive Orders, they are published in the Federal Register, and appear in the Compilation of Presidential Documents. Like Executive Orders, they are sequentially numbered (without reference to year, president, etc.), and are also identified by date, title, issuing president, and the volume and issue number of the Weekly Compilation. All these identifiers are typically present in the GPO MODS files in FD/SYS.

Before 1950 or so, the vast majority of proclamations establish national monuments. More recently, other topics as diverse as the maintenance of the Strategic Petroleum Reserve, tariff schedules, and the celebration of Armed Forces Day show up frequently. As with Executive Orders and Table IV, the proclamations have accession numbers, and the vast majority of references are to notes attached to the Code and not the Code itself.

Table VI maps reorganization plans to the US Code. Reorganization plans are essentially executive orders that describe major alterations to executive-branch agencies and organization, though they do not carry executive-order identifiers. For example, Reorganization Plan 3 of 1970 establishes the Environmental Protection Agency and expands the structure of the National Oceanic and Atmospheric Administration. Generally they carry citations to the Statutes at Large and to the Federal Register (the FR cite does not appear in Table VI). While no concise identifier exists for them in and of themselves, it appears that they could be identified by a year-number combination (e.g. “RP-1970-3”). These associations can readily be modeled by associating an identifier for the plan itself with the page references, through one or more “isPublishedAt” relationships.
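As a rough illustration, the year-number identifier and its “isPublishedAt” relationships could be built as follows. The function names and the triple layout are assumptions, and the citation strings in the usage lines are deliberately left as placeholders rather than real page references.

```python
import re

# Matches identifiers of the suggested form, e.g. "RP-1970-3".
RP_PATTERN = re.compile(r"^RP-(\d{4})-(\d+)$")


def make_plan_id(year, number):
    """Build a year-number identifier for a reorganization plan."""
    return f"RP-{year}-{number}"


def parse_plan_id(plan_id):
    """Split an identifier back into (year, number)."""
    match = RP_PATTERN.match(plan_id)
    if match is None:
        raise ValueError(f"not a reorganization plan identifier: {plan_id!r}")
    return int(match.group(1)), int(match.group(2))


def published_at(plan_id, citations):
    """Return one (subject, predicate, object) triple per citation."""
    return [(plan_id, "isPublishedAt", citation) for citation in citations]


# Placeholder citations; real values would come from Table VI and the FR.
plan = make_plan_id(1970, 3)
triples = published_at(plan, ["stat:VOLUME/PAGE", "fr:VOLUME/PAGE"])
```

Each publication venue gets its own triple, so a plan that appears in both the Statutes at Large and the Federal Register simply carries two “isPublishedAt” statements.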

The Parallel Table of Authorities (PTOA)

The Parallel Table of Authorities and Rules describes relationships between statutes in the US Code and the CFR Parts that they authorize. For the most part, the PTOA maps ranges of sections in the US Code to lists of Parts in the Code of Federal Regulations. It has limitations, described by GPO as follows:

Entries in the table are taken directly from the rulemaking authority citation provided by Federal agencies in their regulations. Federal agencies are responsible for keeping these citations current and accurate. Because Federal agencies sometimes present these citations in an inconsistent manner, the table cannot be considered all-inclusive. The portion of the table listing the United States Code citations is the most comprehensive, as these citations are entered into the table whenever they are given in the authority citations provided by the agencies. United States Statutes at Large and public law citations are carried in the table only when there are no corresponding United States Code citations given.

The suggestions made here, then, are observations about a critically important finding aid, strongly related to legislative material, that is in need of some help. Thinking about the PTOA and the various ways in which modeling techniques such as the ones we recommend might improve it provides an interesting overview of the problems of legislative finding aids in general.

Richards and Bruce have written extensively about its organization and improvement. They note four major areas to address:

- Ambiguity in the description of the relationships themselves. The Table supposedly models four different types of relationship: express authorization, implied authorization, interpretation, and application. These are not distinguished in the PTOA entries.

- Ambiguity in relationship targeting. Entries on both sides of the table are typically given as ranges or lists, implying many-to-many relationships that can be combinatorially expanded. It is not clear whether, in fact, all the sections of the US Code that could be enumerated from a range on the left side of the table would relate to particular Parts of the CFR enumerated from the lists on the right side of the table. It seems unlikely.

- Granularity problems related to citation of the CFR materials by Part. In reality, the authorizing relationship would typically run from a statute to a particular section of the CFR, but the targeting in the PTOA is to the Part containing that section. This is probably less a granularity problem than an informed design decision, driven by the volatility of section-level identifiers relative to the slow update cycle of printed finding aids. Sections of the CFR come and go with some frequency, often moving around within an individual Part. Parts change far less frequently. In print, where updating is difficult and withdrawal of stale material even more so, identifier stability is a much bigger concern. It is possible that a digital resource could track things much more closely.

- Directionality and reciprocity. It is not clear which of the four possible relationships between entries are reciprocal and which are strictly directional, nor is the Table necessarily intended to be used bidirectionally.

Unfortunately, improvement is unlikely, as it would require the collection of improved information from each of the hundreds of agencies involved. Nevertheless, a simplified model can provide at least some useful information. The LII currently models the PTOA as a single relationship between individual pairs of identifiers, asserting that each pair in a combinatorial expansion of entries on each side of the table has some such relationship. That is undoubtedly imprecise, but it is as good as anything currently available and far better than nothing.
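The combinatorial expansion described here can be sketched in a few lines of Python. This is not the LII implementation: the function names, the `relatedTo` label, the citation string formats, and the sample title, section, and Part numbers are all assumptions. Treating a section range as a run of integers is also a simplification, since real US Code section numbers are not always plain integers.

```python
from itertools import product


def expand_section_range(title, first, last):
    """Enumerate a range of US Code sections, assuming integer numbering."""
    return [f"{title} U.S.C. {n}" for n in range(first, last + 1)]


def expand_entry(usc_sections, cfr_parts, relation="relatedTo"):
    """Pair every section on the left with every Part on the right."""
    return [(s, relation, p) for s, p in product(usc_sections, cfr_parts)]


# Hypothetical table entry: three sections mapped to two CFR Parts.
sections = expand_section_range(7, 101, 103)
parts = ["7 CFR Part 1", "7 CFR Part 2"]
pairs = expand_entry(sections, parts)
```

Three sections and two Parts yield six asserted pairs, which shows how quickly the imprecision grows: every pair is recorded as related even though only some of them may reflect a real authorizing relationship.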

As always, we close with a musical selection.

References

General

Other papers

- Richards, Robert, and Thomas Bruce, “Adapting Specialized Legal Material to the Digital Environment: The Code of Federal Regulations Parallel Table of Authorities and Rules”, in ICAIL ’11: Proceedings of the 13th International Conference on Artificial Intelligence and Law. A slide deck based on the presentation is at http://liicr.nl/M7QyTG .

Finding aids

[ Special thanks to Mohammad AL-Asswad, Stevan Gostojic, Sara Frug, Rob Sukol, and of course to Ralph Seep, who contributed many ideas to this.]


[This is part 3 of a three-part post on identifiers. Here are parts
