This is part of a series of posts about LII’s accessibility compliance initiative. The “accessibility” tag links to the posts in this series-in-progress.

LII’s CFR – why bother re-publishing?

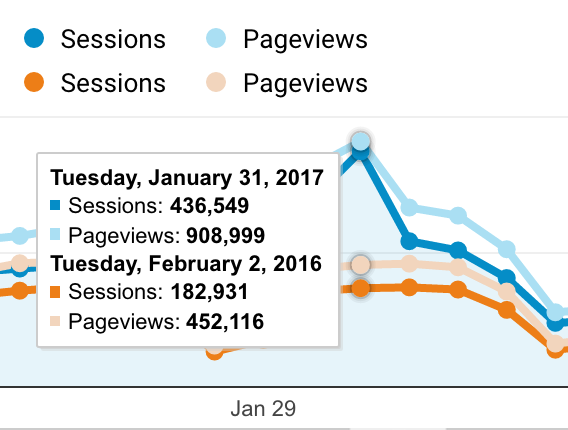

A bit of history: for many years, LII published a finding aid for CFR, which offered tables of contents and facilitated quick lookup by part and section from the eCFR website. This might not sound like much, but at the time, it was the only practical way to link to or bookmark CFR. In 2010, we undertook a joint study with the Office of the Federal Register, GPO, and the Federal Depository Library Program to convert CFR typesetting code to XML. This project resulted in our publication of an enhanced version of the print-CFR. But there was enormous demand for the eCFR, which, unlike the print-CFR, is updated to within a few days of final-rule publication in the Federal Register. So in 2015, when the eCFR became available in XML, we started from scratch and rebuilt our enhanced CFR from that. Nowadays, our CFR reaches about 12 million people each year. If our readers reflect the U.S. population, more than 300,000 of them have visual disabilities and rely on our CFR.

So, what’s the problem?

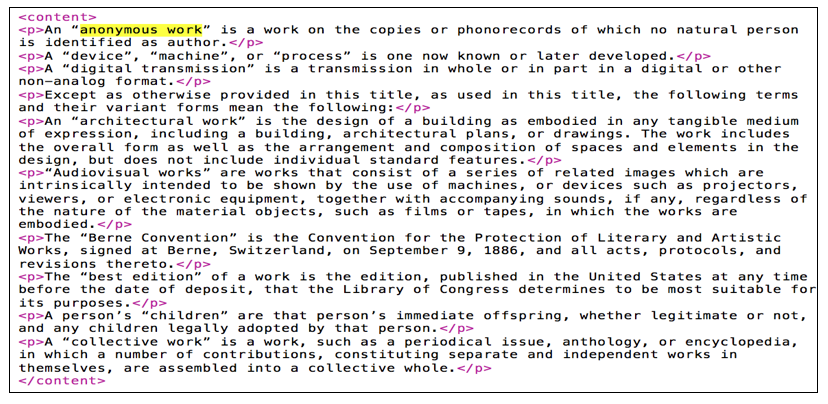

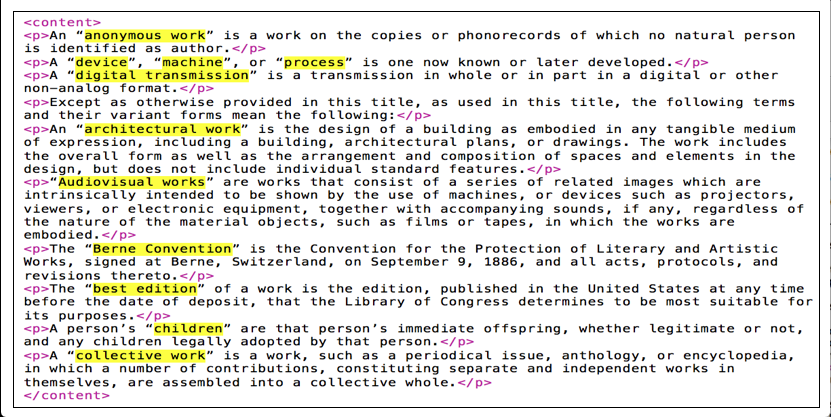

Simply put, we don’t control the content of CFR. The government publishes it, and we are at their mercy if there’s a problem with the content. And there is a problem with the content, a big one. Or, at last count, 15,639 big ones. The problem is images. These are not just the kinds of images we’d assume (like diagrams), but images of equations, forms, data tables, even images of whole documents. Their captions, when present at all, usually convey next to none of the information the images contain. Sometimes the images are printed sideways. Often they are blurry. Never are they machine-readable.

Now, you might say that remediating this problem should be the responsibility of the publisher. But we don’t have time to wait. Our accessibility compliance target is the end of 2019 (see earlier post), and we can’t realistically expect the government to remediate in time.

Public.Resource.Org to the rescue

While we’ve been working on enhancing CFR for publication, Public.Resource.Org has been testing the limits of free access to law, challenging copyright claims to codes and standards incorporated by reference. Founder and president Carl Malamud is famous for saying “Law is the operating system of our society … So show me the manual!” The “manual” is often a very detailed explanation of what the law requires in practice. Together with Point.B Studio, they have converted innumerable codes and standards into machine-readable formats, including transforming pixel-images to Scalable Vector Graphics.

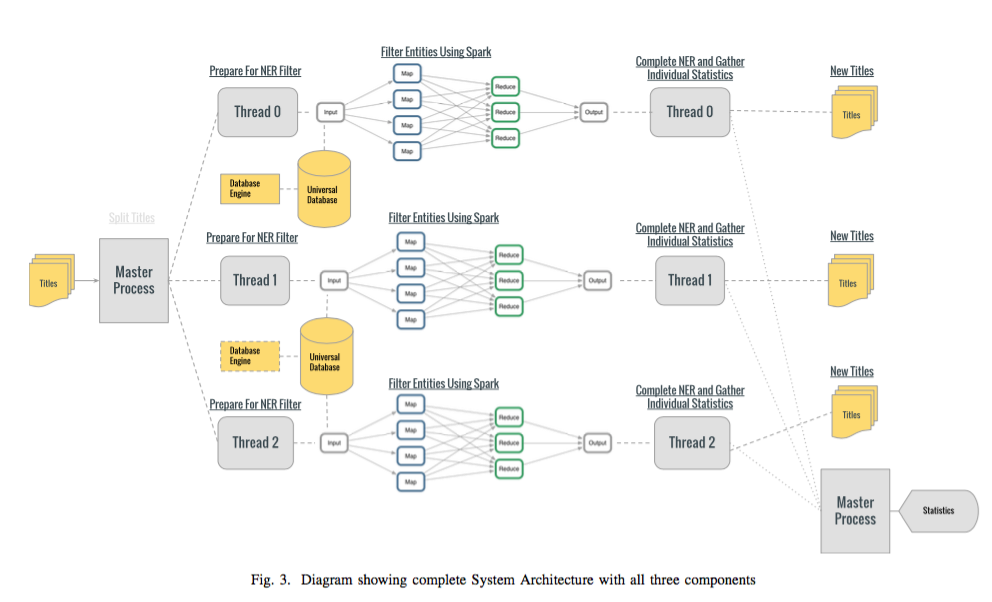

When we realized that we were going to need to deal with more than 15,000 images, our first step was to ask Carl whether he minded if we used the 1,237 CFR images Public.Resource.Org converted in 2016-2017. He very enthusiastically encouraged us to go ahead and, beyond that, offered to support the conversion of more of the images. We provided an inventory of where in the CFR the images appear and how much traffic those pages get. Point.B Studio undertook the monumental task of first sorting the images by category and then converting the most prominent diagrams into SVG and equations into XML. Alongside Public.Resource.Org, Justia is also helping to support this work by Point.B Studio.

We’ll be annotating the SVGs with standardized labels and titles. We want to deliver the results of this work to the public as quickly as possible, so we’re releasing the new content as it becomes available.
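To make "labels and titles" concrete, here is a minimal sketch of one common way to annotate an SVG for screen readers: add `<title>` and `<desc>` as the first children of the root element and point to them with `role="img"` and `aria-labelledby`. This is an illustration, not a description of LII's actual pipeline. The function name `annotate_svg`, the `id_prefix` parameter, and the sample SVG and strings are all hypothetical, and the sketch assumes the incoming SVG has no title or description yet.

```python
import xml.etree.ElementTree as ET

SVG_NS = "http://www.w3.org/2000/svg"
# Serialize SVG elements without an "ns0:" prefix.
ET.register_namespace("", SVG_NS)


def annotate_svg(svg_text: str, title: str, desc: str, id_prefix: str = "fig") -> str:
    """Return svg_text with an accessible title and description added.

    Hypothetical helper: assumes the SVG does not already contain
    <title> or <desc> elements.
    """
    root = ET.fromstring(svg_text)

    title_el = ET.Element(f"{{{SVG_NS}}}title", {"id": f"{id_prefix}-title"})
    title_el.text = title
    desc_el = ET.Element(f"{{{SVG_NS}}}desc", {"id": f"{id_prefix}-desc"})
    desc_el.text = desc

    # Place title and desc ahead of the drawing content.
    root.insert(0, title_el)
    root.insert(1, desc_el)

    # Expose the graphic as a single labelled image to assistive technology.
    root.set("role", "img")
    root.set("aria-labelledby", f"{id_prefix}-title {id_prefix}-desc")
    return ET.tostring(root, encoding="unicode")


# Placeholder input; a real CFR diagram would be far more complex.
example = (
    '<svg xmlns="http://www.w3.org/2000/svg" viewBox="0 0 10 10">'
    '<circle cx="5" cy="5" r="4"/></svg>'
)
annotated = annotate_svg(example, "Placeholder title", "Placeholder description")
```

The value of the annotation depends entirely on the text supplied: a title that names the figure and a description that conveys what the figure shows.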

Step 1: Math is hard, let’s use MathJax

Close to 40% of the images in CFR involve some type of equation. Fortunately, MathML, an XML standard developed in the late 1990s, can be embedded in web pages. The American Mathematical Society (AMS) and the Society for Industrial and Applied Mathematics (SIAM) have teamed up to create MathJax, a JavaScript library that lets us turn machine-readable MathML into readable, screen-reader-accessible web content. Public.Resource.Org and Point.B Studio are converting pictures-of-equations into MathML; our website is using MathJax to provide visual and screen-reader-accessible presentations of the markup. You can see an example at 34 CFR 685.203.
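As a rough illustration of what "MathML embedded in a web page" looks like, the sketch below builds presentation MathML for a generic fraction and wraps it in a page that loads MathJax. The equation `r = a / b` is a placeholder, not taken from CFR, and the helper names `mathml_fraction` and `html_page` are hypothetical. The script URL is an assumption: it points at MathJax 3's MathML-input component on a public CDN, which may not match the version or configuration our site uses.

```python
import xml.etree.ElementTree as ET

MATHML_NS = "http://www.w3.org/1998/Math/MathML"
# Assumed CDN location of a MathJax 3 component that accepts MathML input.
MATHJAX_SRC = "https://cdn.jsdelivr.net/npm/mathjax@3/es5/mml-chtml.js"


def mathml_fraction(result: str, numerator: str, denominator: str) -> str:
    """Build presentation MathML for: result = numerator / denominator."""
    math = ET.Element("math", {"xmlns": MATHML_NS, "display": "block"})
    row = ET.SubElement(math, "mrow")
    ET.SubElement(row, "mi").text = result        # identifier
    ET.SubElement(row, "mo").text = "="           # operator
    frac = ET.SubElement(row, "mfrac")            # stacked fraction
    ET.SubElement(frac, "mi").text = numerator
    ET.SubElement(frac, "mi").text = denominator
    return ET.tostring(math, encoding="unicode")


def html_page(mathml: str) -> str:
    """Wrap a MathML fragment in a minimal HTML page that loads MathJax."""
    return (
        "<!DOCTYPE html>\n"
        '<html lang="en">\n<head>\n<meta charset="utf-8">\n'
        "<title>MathML example</title>\n"
        f'<script async src="{MATHJAX_SRC}"></script>\n'
        "</head>\n<body>\n"
        f"{mathml}\n"
        "</body>\n</html>\n"
    )


page = html_page(mathml_fraction("r", "a", "b"))
```

Because the markup records the structure of the equation (an identifier, an operator, a fraction with a numerator and denominator), a screen reader can speak it and a browser can scale it, neither of which is possible with a picture of the same equation.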

Steps 2 and beyond

In the coming weeks and months, we’ll be remediating accessibility problems and releasing accessible images for eCFR. Stay tuned for updates here and via Twitter.