VoxPopuLII

Van Winkle wakes

In this post, we return to a topic we first visited in a book chapter in 2004. At that time, one of us (Bruce) was an electronic publisher of Federal court cases and statutes, and the other (Hillmann, herself a former law cataloger) was working with large, aggregated repositories of scientific papers as part of the National Science Digital Library project. Then, as now, we were concerned that little attention was being paid to the practical tradeoffs involved in publishing high-quality metadata at low cost. There was a tendency to design metadata schemas that said absolutely everything that could be said about an object, often at the expense of obscuring what needed to be said about it while running up unacceptable costs. Though we did not have a name for it at the time, we were already deeply interested in least-cost, use-case-driven approaches to the design of metadata models, and that naturally led us to wonder what “good” metadata might be. The result was “The Continuum of Metadata Quality: Defining, Expressing, Exploiting”, published as a chapter in an ALA publication, Metadata in Practice.

In that chapter, we attempted to create a framework for talking about (and evaluating) metadata quality. We were concerned primarily with metadata as we were then encountering it: in aggregations of repositories containing scientific preprints, educational resources, and in caselaw and other primary legal materials published on the Web. We hoped we could create something that would be both domain-independent and useful to those who manage and evaluate metadata projects. Whether or not we succeeded is for others to judge.

The Original Framework

At that time, we identified seven major components of metadata quality. Here, we reproduce a part of a summary table that we used to characterize the seven measures. We suggested questions that might be used to draw a bead on the various measures we proposed:

| Quality Measure | Quality Criteria |
| --- | --- |
| Completeness | Does the element set completely describe the objects? Are all relevant elements used for each object? |
| Provenance | Who is responsible for creating, extracting, or transforming the metadata? How was the metadata created or extracted? What transformations have been done on the data since its creation? |
| Accuracy | Have accepted methods been used for creation or extraction? What has been done to ensure valid values and structure? Are default values appropriate, and have they been appropriately used? |
| Conformance to expectations | Does metadata describe what it claims to? Are controlled vocabularies aligned with audience characteristics and understanding of the objects? Are compromises documented and in line with community expectations? |
| Logical consistency and coherence | Is data in elements consistent throughout? How does it compare with other data within the community? |
| Timeliness | Is metadata regularly updated as the resources change? Are controlled vocabularies updated when relevant? |
| Accessibility | Is an appropriate element set for audience and community being used? Is it affordable to use and maintain? Does it permit further value-adds? |

There are, of course, many possible elaborations of these criteria, and many other questions that help get at them. Almost nine years later, we believe that the framework remains both relevant and highly useful, although (as we will discuss in a later section) we need to think carefully about whether and how it relates to the quality standards that the Linked Open Data (LOD) community is discovering for itself, and how it and other standards should affect library and publisher practices and policies.
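As one illustration of how such a question can be turned into something measurable, the sketch below computes, for a small batch of records, how often each expected element is actually filled in. It speaks only to the second completeness question ("Are all relevant elements used for each object?"). The element names and sample records are invented for the example; they are not drawn from the 2004 chapter or from any real collection.

```python
from collections import Counter

# Hypothetical element set and records, invented purely for illustration.
EXPECTED_ELEMENTS = ["title", "creator", "date", "subject"]

records = [
    {"title": "Opinion A", "creator": "Court X", "date": "2004-01-15", "subject": "torts"},
    {"title": "Opinion B", "creator": "Court X", "date": "", "subject": "contracts"},
    {"title": "Opinion C", "date": "2004-03-02"},
]


def element_fill_rates(records, expected):
    """Return, for each expected element, the fraction of records with a non-empty value."""
    if not records:
        return {name: 0.0 for name in expected}
    filled = Counter()
    for record in records:
        for name in expected:
            value = record.get(name)
            # Missing keys, None, and blank strings all count as "not filled".
            if value is not None and str(value).strip():
                filled[name] += 1
    return {name: filled[name] / len(records) for name in expected}


rates = element_fill_rates(records, EXPECTED_ELEMENTS)
for name, rate in rates.items():
    print(f"{name}: {rate:.0%} of records")
```

A number like this says nothing about whether the values are right, only whether they are present. It is a cheap collection-level indicator of the kind that can prompt a conversation about costs and tradeoffs.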

… and the environment in which it was created

Our work was necessarily shaped by the environment we were in. Though we never really said so explicitly, we were looking for quality not only in the data itself, but in the methods used to organize, transform and aggregate it across federated collections. We did not, however, anticipate the speed or scale at which standards-based methods of data organization would be applied. Commonly used standards like FOAF, models such as those contained in schema.org, and lightweight modelling apparatus like SKOS are all things that have emerged into common use since, and of course the use of Dublin Core, our main focus nine years ago, has continued even as the standard itself has been refined. These days, an expanded toolset makes it even more important that we have a way to talk about how well the tools fit the job at hand, and how well they have been applied. An expanded set of design choices accentuates the need to talk about how well choices have been made in particular cases.

Although our work took its inspiration from quality standards developed by a government statistical service, we had not really thought through the sheer multiplicity of information services that were available even then. We were concerned primarily with work that had been done with descriptive metadata in digital libraries, but of course there were, and are, many more people publishing and consuming data in both the governmental and private sectors (to name just two). Indeed, there was already a substantial literature on data quality that arose from within the management information systems (MIS) community, driven by concerns about the reliability and quality of mission-critical data used and traded by businesses. In today’s wider world, where work with library metadata will be strongly informed by the Linked Open Data techniques developed for a diverse array of data publishers, we need to take a broader view.

Finally, we were driven then, as we are now, by managerial and operational concerns. As practitioners, we were well aware that metadata carries costs, and that human judgment is expensive. We were looking for a set of indicators that would spark and sustain discussion about costs and tradeoffs. At that time, we were mostly worried that libraries were not giving costs enough attention, and were designing metadata projects that were unrealistic given the level of detail or human intervention they required. That is still true. The world of Linked Data requires well-understood metadata policies and operational practices simply so publishers can know what is expected of them and consumers can know what they are getting. Those policies and practices in turn rely on quality measures that producers and consumers of metadata can understand and agree on. In today’s world — one in which institutional resources are shrinking rather than expanding — human intervention in the metadata quality assessment process at any level more granular than that of the entire data collection being offered will become the exception rather than the rule.

While the methods we suggested at the time were self-consciously domain-independent, they did rest on background assumptions about the nature of the services involved and the means by which they were delivered. Our experience had been with data aggregated by communities where the data producers and consumers were to some extent known to one another, using a fairly simple technology that was easy to run and maintain. In 2013, that is not the case; producers and consumers are increasingly remote from each other, and the technologies used are both more complex and less mature, though that is changing rapidly.

The remainder of this blog post is an attempt to reconsider our framework in that context.

The New World

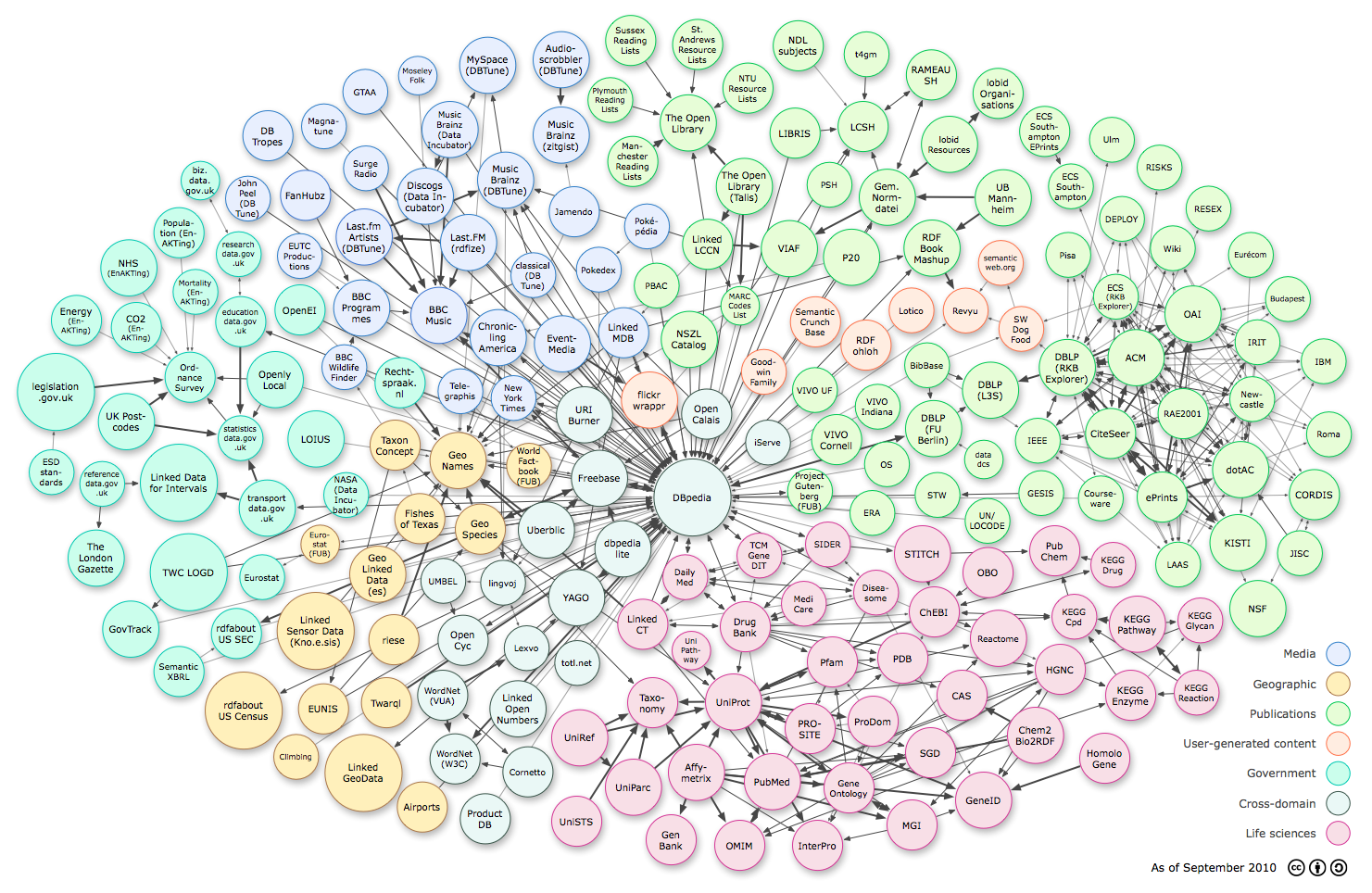

The Linked Open Data (LOD) community has begun to consider quality issues; there are some noteworthy online discussions, as well as workshops resulting in a number of published papers and online resources. It is interesting to see where the work that has come from within the LOD community contrasts with the thinking of the library community on such matters, and where it does not.

In general, the material we have seen leans toward the traditional data-quality concerns of the MIS community. LOD practitioners seem to have started out by putting far more emphasis than we might on criteria that are essentially audience-dependent, and on operational concerns having to do with the reliability of publishing and consumption apparatus. As it has evolved, the discussion features an intellectual move away from those audience-dependent criteria, which are usually expressed as “fitness for use”, “relevance”, or something of the sort (we ourselves used the phrase “community expectations”). Instead, most realize that both audience and usage are likely to be (at best) partially unknown to the publisher, at least at system design time. In other words, the larger community has begun to grapple with something librarians have known for a while: future uses and the extent of dissemination are impossible to predict. There is a creative tension here that is not likely to go away. On the one hand, data developed for a particular community is likely to be much more useful to that community; thus our initial recognition of the role of “community expectations”. On the other, dissemination of the data may reach far past the boundaries of the community that develops and publishes it. The hope is that this tension can be resolved by integrating large data pools from diverse sources, or by taking other approaches that result in data models sufficiently large and diverse that “community expectations” can be implemented, essentially, by filtering.

For the LOD community, the path that began with “fitness-for-use” criteria led quickly to the idea of maintaining a “neutral perspective”. Christian Fürber describes that perspective as the idea that “Data quality is the degree to which data meets quality requirements no matter who is making the requirements”. To librarians, who have long since given up on the idea of cataloger objectivity, a phrase like “neutral perspective” may seem naive. But it is a step forward in dealing with data whose dissemination and user community are unknown. And it is important to remember that the larger LOD community is concerned with quality in data publishing in general, and not solely with descriptive metadata, for which objectivity may no longer be of much value. For that reason, it would be natural to expect the larger community to place greater weight on objectivity in their quality criteria than the library community feels that it can, with a strong preference for quantitative assessment wherever possible. Librarians and others concerned with data that involves human judgment are theoretically more likely to be concerned with issues of provenance, particularly as they concern who has created and handled the data. And indeed that is the case.

The new quality criteria, and how they stack up

Here is a simplified comparison of our 2004 criteria with three views taken from the LOD community.

| Bruce & Hillmann | Dodds, McDonald | Flemming |
| --- | --- | --- |
| Completeness | Completeness; Boundedness; Typing | Amount of data |
| Provenance | History; Attribution; Authoritative | Verifiability |
| Accuracy | Accuracy; Typing | Validity of documents |
| Conformance to expectations | Modeling correctness; Modeling granularity; Isomorphism | Uniformity |
| Logical consistency and coherence | Directionality; Modeling correctness; Internal consistency; Referential correspondence; Connectedness | Consistency |
| Timeliness | Currency | Timeliness |
| Accessibility | Intelligibility; Licensing; Sustainable | Comprehensibility; Versatility; Licensing |
| | | Accessibility (technical); Performance (technical) |

Placing the “new” criteria into our framework was no great challenge; it appears that we were, and are, talking about many of the same things. A few explanatory remarks:

- Boundedness has roughly the same relationship to completeness that precision does to recall in information-retrieval metrics. The data is complete when we have everything we want; its boundedness shows high quality when we have only what we want.

- Flemming’s amount of data criterion talks about numbers of triples and links, and about the interconnectedness and granularity of the data. These seem to us to be largely completeness criteria, though things to do with linkage would more likely fall under “Logical coherence” in our world. Note, again, a certain preoccupation with things that are easy to count. In this case it is somewhat unsatisfying; it’s not clear what the number of triples in a triplestore says about quality, or how it might be related to completeness if indeed that is what is intended.

- Everyone lists criteria that fit well with our notions about provenance. In that connection, the most significant development has been a great deal of work on formalizing the ways in which provenance is expressed. This is still an active area of research, with a lot to be decided. In particular, attempts at true domain independence are not fully successful, and will probably never be so. It appears to us that those working on the problem at DCMI are monitoring the other efforts and incorporating the most worthwhile features.

- Dodds’ typing criterion — which basically says that dereferenceable URIs should be preferred to string literals — participates equally in completeness and accuracy categories. While we prefer URIs in our models, we are a little uneasy with the idea that the presence of string literals is always a sign of low quality. Under some circumstances, for example, they might simply indicate an early stage of vocabulary evolution.

- Flemming’s verifiability and validity criteria need a little explanation, because the terms used are easily confused with formal usages and so are a little misleading. Verifiability bundles a set of concerns we think of as provenance. Validity of documents is about accuracy as it is found in things like class and property usage. Curiously, none of Flemming’s criteria have anything to do with whether the information being expressed by the data is correct in what it says about the real world; they are all designed to convey technical criteria. The concern is not with what the data says, but with how it says it.

- Dodds’ modeling correctness criterion seems to be about two things: whether or not the model is correctly constructed in formal terms, and whether or not it covers the subject domain in an expected way. Thus, we assign it to both “Community expectations” and “Logical coherence” categories.

- Isomorphism has to do with the ability to join datasets together, when they describe the same things. In effect, it is a more formal statement of the idea that a given community will expect different models to treat similar things similarly. But there are also some very tricky (and often abused) concepts of equivalence involved; these are just beginning to receive some attention from Semantic Web researchers.

- Licensing has become more important to everyone. That is in part because Linked Data as published in the private sector may exhibit some of the proprietary characteristics we saw as access barriers in 2004, and also because even public-sector data publishers are worried about cost recovery and appropriate-use issues. We say more about this in a later section.

- A number of criteria listed under Accessibility have to do with the reliability of data publishing and consumption apparatus as used in production. Linked Data consumers want to know that the endpoints and triple stores they rely on for data are going to be up and running when they are needed. That brings a whole set of accessibility and technical performance issues into play. At least one website exists for the sole purpose of monitoring endpoint reliability, an obvious concern of those who build services that rely on Linked Data sources. Recently, the LII made a decision to run its own mirror of the DrugBank triplestore to eliminate problems with uptime and to guarantee low latency; performance and accessibility had become major concerns. For consumers, due diligence is important.
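Two of the remarks above lend themselves to a small worked example: the recall/precision analogy for completeness and boundedness, and the typing criterion's preference for URIs over string literals. The sketch below is only our reading of those ideas, not a metric proposed by Dodds or Flemming. The triples, the prefixed property names, and the `WANTED` and `LINKABLE` sets are all hypothetical.

```python
# Hypothetical (subject, predicate, object) triples, invented for illustration.
triples = [
    ("ex:case1", "dct:title", "Smith v. Jones"),
    ("ex:case1", "dct:creator", "http://example.org/courts/x"),
    ("ex:case1", "dct:subject", "torts"),
    ("ex:case1", "ex:internalNote", "checked by staff"),
    ("ex:case1", "ex:legacyId", "12345"),
]

# Properties a (hypothetical) consumer wants to find in the data.
WANTED = {"dct:title", "dct:creator", "dct:subject", "dct:date"}
# Properties whose values we would prefer to be URIs (an assumption of this sketch).
LINKABLE = {"dct:creator", "dct:subject"}


def completeness(present, wanted):
    """Recall-like: share of wanted properties that are present."""
    return len(present & wanted) / len(wanted) if wanted else 0.0


def boundedness(present, wanted):
    """Precision-like: share of present properties that were wanted.

    Returns 0.0 for an empty dataset, which is an arbitrary choice.
    """
    return len(present & wanted) / len(present) if present else 0.0


def typing_ratio(triples, linkable):
    """Share of values of linkable properties that look like HTTP URIs."""
    values = [obj for _, pred, obj in triples if pred in linkable]
    if not values:
        return 0.0
    uris = [v for v in values if v.startswith(("http://", "https://"))]
    return len(uris) / len(values)


present = {pred for _, pred, _ in triples}
print("completeness:", completeness(present, WANTED))
print("boundedness:", boundedness(present, WANTED))
print("typing ratio:", typing_ratio(triples, LINKABLE))
```

In this toy data the literal "torts" lowers the typing ratio, yet it may simply reflect a subject vocabulary that has not been minted as URIs so far. That is the unease we expressed above about treating literals as a sign of low quality.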

For us, there is a distinctly different feel to the examples that Dodds, Flemming, and others have used to illustrate their criteria; they seem to be looking at a set of phenomena that has substantial overlap with ours, but is not quite the same. Part of it is simply the fact, mentioned earlier, that data publishers in distinct domains have distinct biases. For example, those who can’t fully believe in objectivity are forced to put greater emphasis on provenance. Others who are not publishing descriptive data that relies on human judgment feel they can rely on more “objective” assessment methods. But the biggest difference in the “new quality” is that it puts a great deal of emphasis on technical quality in the construction of the data model, and much less on how well the data that populates the model describes real things in the real world.

There are three reasons for that. The first has to do with the nature of the discussion itself. All quality discussions, simply as discussions, seem to neglect notions of factual accuracy because factual accuracy seems self-evidently a Good Thing; there’s not much to talk about. Second, the people discussing quality in the LOD world are modelers first, and so quality is seen as adhering primarily to the model itself. Finally, the world of the Semantic Web rests on the assumption that “anyone can say anything about anything”. For some, the egalitarian interpretation of that statement reaches the level of religion, making it very difficult to measure quality by judging whether something is factual or not; from a purist’s perspective, it’s opinions all the way down. There is, then, a tendency to rely on formalisms and modeling technique to hold back the tide.

In 2004, we suggested a set of metadata-quality indicators suitable for managers to use in assessing projects and datasets. An updated version of that table would look like this:

| Quality Measure | Quality Criteria |
| --- | --- |
| Completeness | Does the element set completely describe the objects? Are all relevant elements used for each object? Does the data contain everything you expect? Does the data contain only what you expect? |
| Provenance | Who is responsible for creating, extracting, or transforming the metadata? How was the metadata created or extracted? What transformations have been done on the data since its creation? Has a dedicated provenance vocabulary been used? Are there authenticity measures (e.g. digital signatures) in place? |
| Accuracy | Have accepted methods been used for creation or extraction? What has been done to ensure valid values and structure? Are default values appropriate, and have they been appropriately used? Are all properties and values valid/defined? |
| Conformance to expectations | Does metadata describe what it claims to? Does the data model describe what it claims to? Are controlled vocabularies aligned with audience characteristics and understanding of the objects? Are compromises documented and in line with community expectations? |
| Logical consistency and coherence | Is data in elements consistent throughout? How does it compare with other data within the community? Is the data model technically correct and well structured? Is the data model aligned with other models in the same domain? Is the model consistent in the direction of relations? |
| Timeliness | Is metadata regularly updated as the resources change? Are controlled vocabularies updated when relevant? |
| Accessibility | Is an appropriate element set for audience and community being used? Are the data and its access methods well documented, with exemplary queries and URIs? Do things have human-readable labels? Is it affordable to use and maintain? Does it permit further value-adds? Does it permit republication? Is attribution required if the data is redistributed? Are human- and machine-readable licenses available? |
| Accessibility (technical) | Are reliable, performant endpoints available? Will the provider guarantee service (e.g. via a service level agreement)? Is the data available in bulk? Are URIs stable? |

The differences in the example questions reflect the differences of approach that we discussed earlier. Also, the new approach separates criteria related to technical accessibility from questions that relate to intellectual accessibility. Indeed, we suspect that “accessibility” may have been too broad a notion in the first place. Wider deployment of metadata systems and a much greater, still-evolving variety of producer-consumer scenarios and relationships have created a need to break it down further. There are as many aspects to accessibility as there are types of barriers — economic, technical, and so on.
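The technical-accessibility questions are the ones most easily reduced to numbers. As a rough sketch, suppose a consumer has been probing an endpoint at intervals and recording whether it answered and how quickly. The probe log, the target figure, and the function names below are all hypothetical, and nothing here queries a real service.

```python
from statistics import median

# Hypothetical probe log: (endpoint responded?, latency in seconds or None).
probes = [
    (True, 0.21),
    (True, 0.35),
    (False, None),
    (True, 0.18),
    (True, 1.40),
]

# Hypothetical availability target of the kind a service-level agreement might state.
TARGET_AVAILABILITY = 0.99


def availability(probes):
    """Fraction of probes that received a response."""
    if not probes:
        return 0.0
    return sum(1 for ok, _ in probes if ok) / len(probes)


def median_latency(probes):
    """Median latency over successful probes, or None if there were none."""
    latencies = [latency for ok, latency in probes if ok]
    return median(latencies) if latencies else None


observed = availability(probes)
print(f"availability: {observed:.0%}")
print(f"median latency: {median_latency(probes):.2f}s")
print("meets target:", observed >= TARGET_AVAILABILITY)
```

Figures like these only cover the technical side of accessibility. The economic and intellectual barriers discussed above do not reduce to a probe log.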

As before, our list is not a checklist or a set of must-haves, nor does it contain all the questions that might be asked. Rather, we intend it as a list of representative questions that might be asked when a new Linked Data source is under consideration. They are also questions that should inform policy discussion around the uses of Linked Data by consuming libraries and publishers.

That is work that can be formalized and taken further. One intriguing recent development is work toward a Data Quality Management Vocabulary. Its stated aims are to

- support the expression of quality requirements in the same language, at web scale;

- support the creation of consensual agreements about quality requirements;

- increase transparency around quality requirements and measures;

- enable checking for consistency among quality requirements; and

- generally reduce the effort needed for data quality management activities.

The apparatus to be used is a formal representation of “quality-relevant” information. We imagine that the researchers in this area are looking forward to something like automated e-commerce in Linked Data, or at least a greater ability to do corpus-level quality assessment at a distance. Of course, “fitness-for-use” and other criteria that can really only be seen from the perspective of the user will remain important, and there will be interplay between standardized quality and performance measures (on the one hand) and audience-relevant features on the other. One is rather reminded of the interplay of technical specifications and “curb appeal” in choosing a new car. That would be an important development in a Semantic Web industry that has not completely settled on what a car is really supposed to be, let alone how to steer or where one might want to go with it.
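To make the idea of formally represented quality requirements a little more concrete, here is a minimal sketch. It is emphatically not the Data Quality Management Vocabulary itself, which is an RDF vocabulary we do not attempt to reproduce. The `Requirement` class, its two rule types, and the sample data are assumptions made up for illustration. The sketch shows two of the aims listed above in miniature: checking requirements from different parties for consistency, and checking a record against them.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Requirement:
    """One quality requirement about a property (hypothetical model)."""
    prop: str    # property the requirement is about
    rule: str    # "required" or "forbidden"
    source: str  # who stated the requirement


def conflicts(requirements):
    """Return properties that one requirement demands and another forbids."""
    required = {r.prop for r in requirements if r.rule == "required"}
    forbidden = {r.prop for r in requirements if r.rule == "forbidden"}
    return required & forbidden


def violations(record, requirements):
    """Return the requirements that a single record fails."""
    failed = []
    for r in requirements:
        has_value = bool(record.get(r.prop))
        if r.rule == "required" and not has_value:
            failed.append(r)
        elif r.rule == "forbidden" and has_value:
            failed.append(r)
    return failed


# Hypothetical requirements from three different parties.
requirements = [
    Requirement("dct:license", "required", "consumer"),
    Requirement("dct:creator", "required", "publisher"),
    Requirement("ex:internalNote", "forbidden", "publisher"),
    Requirement("ex:internalNote", "required", "archive"),
]

record = {"dct:creator": "http://example.org/courts/x", "ex:internalNote": "draft"}

print("conflicting properties:", conflicts(requirements))
for r in violations(record, requirements):
    print(f"fails: {r.prop} is {r.rule} (per {r.source})")
```

Even this toy version surfaces a disagreement between parties before any data is exchanged, which is where much of the value of stating requirements in a shared form would lie.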

Conclusion

Libraries have always been concerned with quality criteria in their work as creators of descriptive metadata. One of our purposes here has been to show how those criteria will evolve as libraries become publishers of Linked Data, as we believe that they must. That much seems fairly straightforward, and there are many processes and methods by which quality criteria can be embedded in the process of metadata creation and management.

More difficult, perhaps, is deciding how these criteria can be used to construct policies for Linked Data consumption. As we have said many times elsewhere, we believe that there are tremendous advantages and efficiencies that can be realized by linking to data and descriptions created by others, notably in connecting up information about the people and places that are mentioned in legislative information with outside information pools. That will require care and judgement, and quality criteria such as these will be the basis for those discussions. Not all of these criteria have matured — or ever will mature — to the point where hard-and-fast metrics exist. We are unlikely to ever see rigid checklists or contractual clauses with bullet-pointed performance targets, at least for many of the factors we have discussed here. Some of the new accessibility criteria might be the subject of service-level agreements or other mechanisms used in electronic publishing or database-access contracts. But the real use of these criteria is in assessments that will be made long before contracts are negotiated and signed. In that setting, these criteria are simply the lenses that help us know quality when we see it.

References

- Bruce, Thomas R., and Diane Hillmann (2004). “The Continuum of Metadata Quality: Defining, Expressing, Exploiting”. In Metadata in Practice, Hillmann and Westbrooks, eds. Online at http://www.ecommons.cornell.edu/handle/1813/7895

- DCMI Metadata Provenance Task Group, at http://dublincore.org/groups/provenance/ .

- Dodds, Leigh (2010). “Quality Indicators for Linked Data Datasets”. Online posting at http://answers.semanticweb.com/questions/1072/quality-indicators-for-linked-data-datasets .

- Flemming, Annika (2010). “Quality Criteria for Linked Data Sources”. Online at http://sourceforge.net/apps/mediawiki/trdf/index.php?title=Quality_Criteria_for_Linked_Data_sources&action=history

- Fürber, Christian, and Martin Hepp (2011). “Towards a Vocabulary for Data Quality Management in Semantic Web Architectures”. Presentation at the First International Workshop on Linked Web Data Management, Uppsala, Sweden. Online at http://www.slideshare.net/cfuerber/towards-a-vocabulary-for-data-quality-management-in-semantic-web-architectures .

- W3C, “Provenance Vocabulary Mappings”. At http://www.w3.org/2005/Incubator/prov/wiki/Provenance_Vocabulary_Mappings .

Thomas R. Bruce is the Director of the Legal Information Institute at the Cornell Law School.

Diane Hillmann is a principal in Metadata Management Associates, and a long-time collaborator with the Legal Information Institute. She is currently a member of the Advisory Board for the Dublin Core Metadata Initiative (DCMI), and was co-chair of the DCMI/RDA Task Group.

VoxPopuLII is edited by Judith Pratt. Editors-in-Chief are Stephanie Davidson and Christine Kirchberger, to whom queries should be directed.

I haven’t fully digested this yet, but it seems to me that there is very little guidance about links and URLs for a discussion about Linked Data.