[ Ed. note: two of Professor Perritt’s papers have strongly influenced the LII. We recommend them here as “extra credit” reading. “Federal Electronic Information Policy” provides the rationale on which the LII has always built its innovations. “Should Local Governments Sell Local Spatial Databases Through State Monopolies?” unpacks issues around the resale of public information that are still with us (and likely always will be). ]

In 1990 something called the “Internet” was just becoming visible to the academic community. Word processing on small proprietary networks had gained traction in most enterprises, and PCs had been more than toys for about five years. The many personal computing magazines predicted that each year would be “the Year of the LAN”–local area network–but the year of the LAN always seemed to be next year. The first edition of my How to Practice Law With Computers, published in 1988, said that email could be useful to lawyers and had a chapter on Westlaw and Lexis. It predicted that electronic exchange of documents over wide-area networks would be useful, as would electronic filing of documents, but the word “Internet” did not appear in the index. My 1991 Electronic Contracting, Publishing and EDI Law, co-authored with Michael Baum, focused on direct mainframe connections for electronic commerce between businesses, but barely mentioned the Internet. The word Internet did appear in the index, but was mentioned in only two sentences in 871 pages.

Then, in 1990, the Kennedy School at Harvard University held a conference on the future of the Internet. Computer scientists from major universities, Department of Defense officials, and a handful of representatives of commercial entities considered how to release the Internet from its ties to defense and university labs and to embrace the growing desire to exploit it commercially. I was fortunate to be on sabbatical leave at the Kennedy School and to be one of the few lawyers participating in the conference. In a chapter of a book published by Harvard afterwards on market structures, I said, “A federally sponsored high-speed digital network with broad public, non-profit and private participation presents the possibility of a new kind of market for electronic information products, one in which the features of information products are ‘unbundled’ and assembled on a network.”

The most important insight from the 1990 conference was that the Internet would permit unbundling of value. My paper for the Harvard conference and a law review article I published shortly thereafter in the Harvard Journal of Law and Technology talked about ten separate value elements, ranging from content to payment systems, with various forms of indexing and retrieval in the middle. The Internet meant that integrated products were a thing of the past; you didn’t have to go inside separate walled gardens to shop. You didn’t have to pay for West’s key numbering system in order to get the text of a judicial opinion written by a public employee on taxpayer time. Soon, you wouldn’t have to buy the whole music album with nine songs you didn’t particularly like in order to get the one song you wanted. Eventually, you wouldn’t have to buy the whole cable bundle in order to get the History Channel, or to be a Comcast cable TV subscriber to get a popular movie or the Super Bowl streamed to your mobile device.

A handful of related but separate activities developed some of the ideas from the Harvard conference further. Ron Staudt, Peter Martin, Tom Bruce, and I experimented with unbundling of legal information on small servers connected to the Internet to permit law students, lawyers, and members of the public to obtain access to court decisions, statutes, and administrative agency decisions in new ways. Cornell’s Legal Information Institute was the result.

David Johnson, Ron Plesser, Jerry Berman, Bob Gellman, Peter Weiss, and I worked to shape the public discourse on how the law should channel new political and economic currents made possible by the Internet. Larry Lessig was a junior recruit to some of these earliest efforts, and he went on to be the best of us all in articulating a philosophy.

In 1996, I wrote a supplement to How to Practice Law With Computers, called Internet Basics for Lawyers, which encouraged lawyers to use the Internet for email and eventually to deliver legal services and to participate in litigation and agency rulemaking and adjudication. In the same year, I published a law review article explaining how credit-card dispute resolution procedures protected consumers in ecommerce.

One by one, the proprietary bastions fell (postal mail, libraries, bookstores, department stores, government agency reading rooms) as customers chose the open and ubiquitous over the closed and incompatible. Now, only a few people remember MCImail, Western Union’s EasyLink, dial-up Earthlink, or CompuServe. AOL is a mere shadow of its former self, trying to grab the tail of the Internet that it too long resisted. The Internet gradually absorbed not only libraries and government book shops but also consumer markets and the legislative and adjudicative processes. Blockbuster video stores are gone. Borders Books is gone. The record labels are mostly irrelevant. Google, Amazon, and Netflix are crowding Hollywood. Millions of small merchants sell their goods every second on Amazon and eBay. The shopping malls are empty. Amazon is building brick-and-mortar fulfillment warehouses all over the place. Tens of millions of artists are able to show their work on YouTube.

Now the Internet is well along in absorbing television and movies, and has begun absorbing the telephone system and two-way radio. Video images move as bit streams within IP packets. The rate at which consumers are cutting the cord and choosing to watch their favorite TV shows or the latest Hollywood blockbusters through the Internet is dramatic.

Television and other video entertainment are filling up the Internet’s pipes. New content delivery networks bypass the routers and links that serve general Internet users in the core of the Internet. But the most interesting engineering developments relate to the edge of the Internet, not its core. “Radio Access Networks,” including cellphone providers, are rushing to develop micro-, nano-, pico-, and femto-cells beyond the traditional cell towers to offload some of the traffic. Some use Wi-Fi, and some use licensed spectrum with LTE cellphone modulation. Television broadcasters meanwhile are embracing ATSC 3.0, which will allow their hundred megawatt transmitters to beam IP traffic over their footprint areas and – a first for television – to be able to charge subscribers for access.

The telephone industry and the FCC both have acknowledged that within a couple of years the public telephone system will no longer be the Public Switched Telephone System; circuit switches will be replaced completely by IP routers.

Already, the infrastructure for land mobile radio (public safety and industrial and commercial services) comprises individual handsets and other mobile transceivers communicating by VHF and UHF radio with repeater sites or satellites, tied together through the Internet.

Four forces have shaped success: Conservatism, Catastrophe forecasts, Keepers of the Commons, and Capitalism. Conservatism operates by defending the status quo and casting doubt about technology’s possibilities. Opponents of technology have never been shy. A computer on every desk? “Never happen,” the big law firms said. “We don’t want our best and brightest young lawyers to be typists.”

Communicate by email? “It would be unethical,” dozens of CLE presenters said. “Someone might read the emails in transit while they are resting on store-and-forward servers.” (The email technology of the day did not use store-and-forward servers.)

Buy stuff online? “It’s a fantasy,” the commercial lawyers said. “No one will trust a website with her credit card number. Someone will have to invent a whole new form of cybermoney.”

Catastrophe has regularly been forecast. “Social interaction will atrophy. Evil influences will ruin our kids. Unemployment will skyrocket,” assistant professors eager for tenure and journalists jockeying to lead the evening news warned. “The Internet is insecure!” cybersecurity experts warned. “We’ve got to stick with paper and unplugged computers.” The innovators went ahead anyway and catastrophe did not happen. A certain level of hysteria about how new technologies will undermine life is normal. It is always easier to ring alarm bells than to understand the technology and think about its potential.

Keepers of the Commons, the computer scientists who invented the Internet, articulated two philosophies, which proved more important than engineering advances in enabling the Internet to replace, one after another, the preceding ways of organizing work, play, and commerce. To be sure, new technologies mattered. Faster, higher quality printers were crucial in placing small computers and the Internet at the heart of new virtual libraries, first Westlaw and Lexis and then Google and Amazon. Higher speed modems and the advanced modulation schemes they enabled made it faster to retrieve an online resource than to walk to the library and pull the same resource off the shelf. One-click ordering made e-commerce more attractive. More than 8,000 RFCs define technology standards for the Internet.

The philosophies shaped use of the technologies. The first was the realization that an open architecture opens up creative and economic opportunities for millions of individuals and small businesses that otherwise were barred from the marketplace by high barriers to entry. The second was the realization that being able to contribute creatively can be a powerful motivator for activity, alongside expectations of profit. The engineers who invented the Internet have been steadfast in protecting the commons: articulating the Internet’s philosophy of indifference to content, leaving application development for the territory beyond its edges, and contributing untold hours to the development of open standards called “Requests for Comment” (“RFCs”). Continued work on RFCs, services like Wikipedia and LII, and posts to YouTube show that being able to contribute is a powerful motivator, regardless of whether you make any money. Many of these services prove that volunteers can add a great deal of value to the marketplace, often with higher quality than commercial vendors.

Capitalism has operated alongside the Commons, driving the Internet’s buildout and flourishing as a result. Enormous fortunes have been made in Seattle and Silicon Valley. Many of the largest business enterprises in the world did not exist in 1990.

Internet Capitalism was embedded in evangelism. The fortunes resulted from revolutionary ideas, not from a small-minded extractive philosophy well captured by the song “Master of the House” in the musical play Les Misérables:

Nothing gets you nothing, everything has got a little price

Reasonable charges plus some little extras on the side

Charge ‘em for the lice, extra for the mice,

Two percent for looking in the mirror twice

Here a little slice, there a little cut,

Three percent for sleeping with the window shut.

Throughout most of the 1990s, the established, legacy firms were Masters of the House, unwilling to let the smallest sliver of an intellectual property escape their clutches without a payment of some kind. They reinforced the walls around their asset gardens and recruited more tolltakers than creative talent. The gates into the gardens work differently, but each charges a toll.

Meanwhile, the Apples, Googles, and Amazons of the world flourished because they offered something completely different–more convenient and more tailored to the way that consumers wanted to do things. Nobody ever accused Steve Jobs of giving away much for free or being shy in his pricing, but he made it clear that when you bought something from him you were buying something big and something new.

The tension between Commons and Capitalism continues. In the early days, it was a contest between those who wanted to establish a monopoly over some resource (governmental information such as patent office records, Securities and Exchange Commission filings, or judicial opinions and statutes) and new entrants who fought to pry open new forms of access. Now the video entertainment industry’s Master of the House habits are getting in the way of the necessary adaptation to cord cutting, big time. The video entertainment industry is scrambling to adapt its business models.

Intellectual property law can be an incentive to innovate, but it also represents a barrier to innovation. Throughout much of the 1980s, when the Internet was taking shape, the law was uncertain whether either patent or copyright was available for computer software. Yet new businesses by the hundreds of thousands flocked to offer the fruits of their innovative labors to the marketplace. To be sure, as Internet-related industries matured, their managers and the capital markets supporting them sought copyright and patent protection of assets to encourage investment.

Whether you really believe in a free market depends on where you sit at a particular time. When you have just invented something, you think a free market is great as you try to build a customer base. Interoperability should be the norm. Once you have a significant market share, you think barriers to entry are great, and so do your potential investors. Switching costs should be as high as possible.

The Master of the House still operates his inns and walled gardens. Walled gardens reign supreme with respect to video entertainment. Popular social media sites like Facebook, Snapchat, Twitter, and YouTube are walled gardens. Technologically, the Master of the House favors mobile apps at the expense of mobile web browsers: it’s easier to lock customers in a walled garden with an app, because an app is a walled garden.

An Internet architecture designed to handle video entertainment bits in the core of the Internet will make it more difficult to achieve net neutrality. CDNs are private networks, outside the scope of the FCC’s Open Internet Order. They are free to perpetuate and extend the walled gardens that Balkanize the public space with finely diced and chopped intellectual property rights.

Net Neutrality is fashionable, but it also is dangerous. Almost 100 years of experience with the Interstate Commerce Commission, the FCC, and the Civil Aeronautics Board shows that regulatory schemes adopted initially to ensure appropriate conduct in the marketplace also make the coercive power of the government available to legacy defenders of the status quo who use it to stifle innovation and the competition that results from it.

It’s too easy for some heretofore unappreciated group to claim that it is being underserved, that there is a new “digital divide,” and that resources need to be devoted primarily to making Internet use equitable. In addition, assistant professors seeking tenure and journalists seeking the front page or to lead the evening news are always eager to write stories and articles about how new Internet technologies present a danger to strongly held values. Regulating the Internet like telephone companies provides a well-established channel for these political forces to override economic and engineering decisions.

Security represents another potent threat. Terrorists, cyberstalkers, thieves, spies, and saboteurs use the Internet, like everyone else. Communication networks, from the earliest days of telegraph, telephones, and radio, have made the jobs of police and counterintelligence agencies more difficult. The Internet does so now. Calls for closer monitoring of Internet traffic, banning certain content, and improving security are nothing new. Each new threat, whether it be organization of terrorist cells, more creative email phishing exploits, or Russian interference in American elections, intensifies calls for restrictions on the Internet. The incorporation of the telephone system and public safety two-way radio into the Internet will make it easier for exaggerated concerns about network security to make it harder to use the Internet. Security can always be improved by disconnecting. It can be improved by obscuring usefulness behind layers of guards. The Internet may be insecure, but it is easy to use.

These calls have always had a certain level of resonance with the public, but so far have mostly given way to stronger voices protecting the Internet’s philosophy of openness. Whether that will continue to happen is uncertain, given the weaker commitment to freedom of expression and entrepreneurial latitude in places like China, or even some places in Europe. Things might be different this time around because of the rise of a know-nothing populism around the world.

The law actually has had very little to do with the Internet’s success. The Internet has been shaped almost entirely by entrepreneurs and engineers. The two most important Internet laws are shields. In 1992, my first law review article on the Internet, based on my participation in the Harvard conference, said:

Any legal framework . . . should serve the following three goals: (1) There should be a diversity of information products and services in a competitive marketplace; this means that suppliers must have reasonable autonomy in designing their products; (2) users and organizers of information content should not be foreclosed from access to markets or audiences; and (3) persons suffering legal injury because of information content should be able to obtain compensation from someone if they can prove traditional levels of fault.

It recommended immunizing intermediaries from liability for harmful content as long as they acted like common carriers, not discriminating among content originators. That concept was codified in the safe harbor provisions of the Digital Millennium Copyright Act and section 230 of the Communications Decency Act, both of which shield intermediaries from liability for allegedly harmful content sponsored by others. In that article and other early articles and policy papers, I urged a light touch for regulation.

David Johnson, David Post, Ron Plesser, Jack Goldsmith, and I used to argue in the late 1990s about whether the world needed some kind of new Internet law or constitution. Goldsmith took the position that existing legal doctrines of tort, contract, and civil procedure were perfectly capable of adapting themselves to new kinds of Internet disputes. He was right.

Law is often criticized for being behind technology. That is not a weakness; it is a strength. For law to be ahead of technology stifles innovation. What is legal depends on guesses lawmakers have made about the most promising directions of technological development. Those guesses are rarely correct. Law should follow technology, because only if it does so will it be able to play its most appropriate role of filling in gaps and correcting the directions of other societal forces that shape behavior: economics, social pressure embedded in the culture, and private lawsuits.

Here is the best sequence: a new technology is developed. A few bold entrepreneurs take it up and build it into their business plans. In some cases it will be successful and spread; in most cases it will not. The technologies that spread will impact other economic players. They will threaten to erode those players’ market shares; they will confront them with choosing new technology if they wish to remain viable businesses; they will goad them into seeking innovations in their legacy technologies.

The new technology will probably cause accidents, injuring and killing some of its users and injuring the property and persons of bystanders. Widespread use of the technology also will have adverse effects on other, intangible interests, such as privacy and intellectual property. Those suffering injury will seek compensation from those using the technology and try to get them to stop using it.

Most of these disputes will be resolved privately without recourse to governmental institutions of any kind. Some of them will find their way to court. Lawyers will have little difficulty framing the disputes in terms of well-established rights, duties, privileges, powers, and liabilities. The courts will hear the cases, with lawyers on opposing sides presenting creative arguments as to how the law should be understood in light of the new technology. Judicial decisions will result, carefully explaining where the new technology fits within long-accepted legal principles.

Law professors, journalists, and interest groups will write about the judicial opinions, and, gradually, conflicting views will crystallize as to whether the judge-interpreted law is correct for channeling the technology’s benefits and costs. Eventually, if the matter has sufficient political traction, someone will propose a bill in a city council, state legislature, or the United States Congress. Alternately, an administrative agency will issue a notice of proposed rulemaking, and a debate over codification of legal principles will begin.

This is a protracted, complex, unpredictable process, and that may make it seem undesirable. But it is beneficial, because the kind of interplay that results from a process like this produces good law. It is the only way to test legal ideas thoroughly and assess their fit with the actual costs and benefits of technology as it is actually deployed in a market economy.

A look backwards warrants optimism for the future, despite new or renewed threats. The history of the Internet has always involved argument with those who said it would never take off because people would prefer established ways of doing business. It has always been subjected to various economic and legal attempts to block its use by new competitors. The Master of the House has always lurked. Shrill voices have always warned about its catastrophic social effects. Despite these enemies, it has prevailed and opened up new pathways for human fulfillment. The power of that vision and the experience of that fulfillment will continue to push aside the forces that are afraid of the future.

Henry H. Perritt, Jr. is Professor of Law and Director of the Graduate Program in Financial Services Law at the Chicago-Kent School of Law. A pioneer in Federal information policy, he served on President Clinton’s Transition Team, working on telecommunications issues, and drafted principles for electronic dissemination of public information, which formed the core of the Electronic Freedom of Information Act Amendments adopted by Congress in 1996. During the Ford administration, he served on the White House staff and as deputy under secretary of labor.

Professor Perritt served on the Computer Science and Telecommunications Policy Board of the National Research Council, and on a National Research Council committee on “Global Networks and Local Values.” He was a member of the interprofessional team that evaluated the FBI’s Carnivore system. He is a member of the bars of Virginia (inactive), Pennsylvania (inactive), the District of Columbia, Maryland, Illinois and the United States Supreme Court. He is a published novelist and playwright.

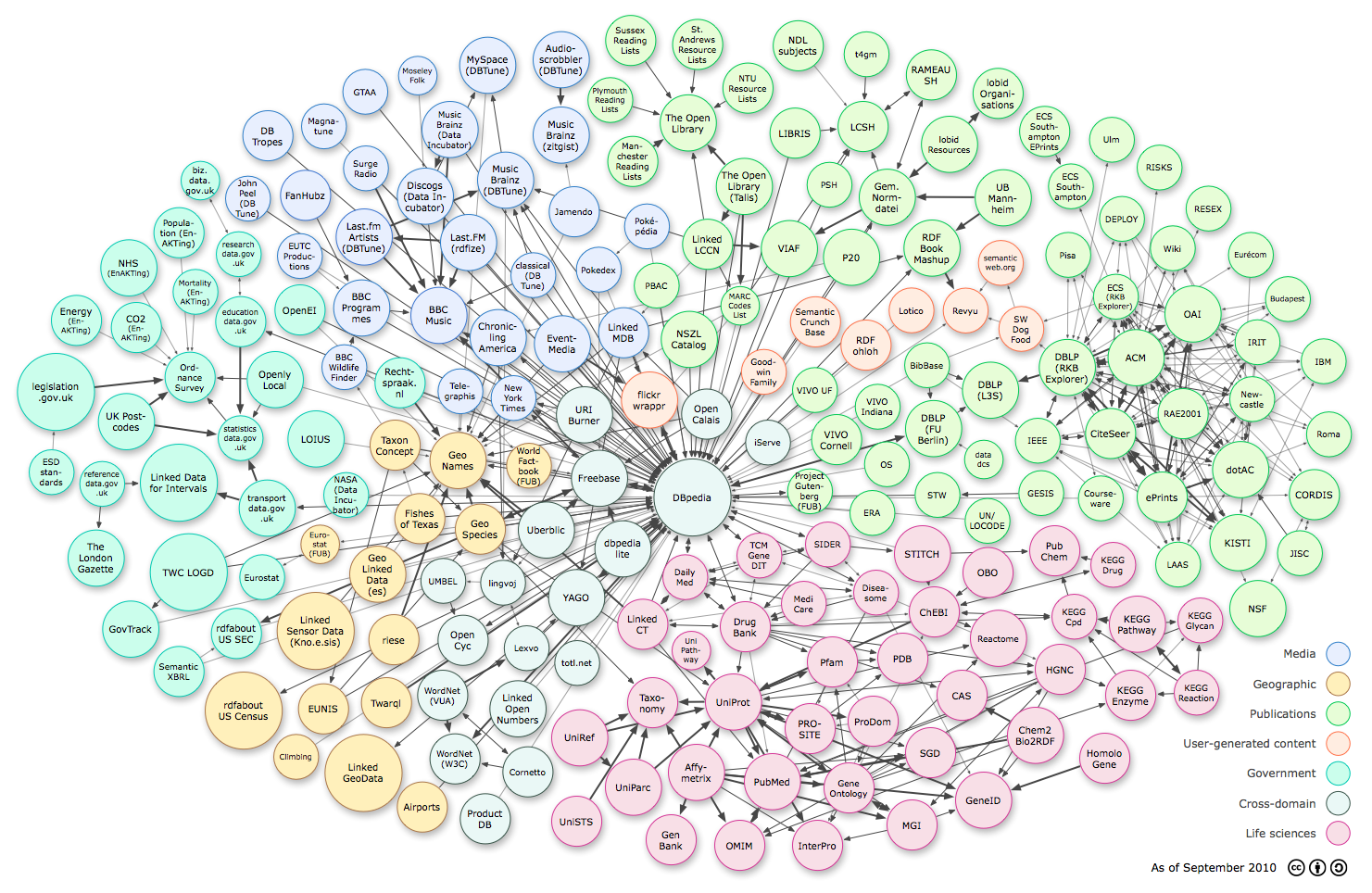
