Site tuning as high drama

LII Associate Director Sara Frug is having a bad day. It’s noon, East Coast time, and the LII web site has slowed to a crawl. It’s taking 16 seconds to get a page of legal information that you could normally get in 7. The LII’s West Coast audience is just getting to the office. Between now and 5 o’clock both East and West Coast people will make heavy use of the site. Things are going from bad to worse, and they will get even worse than that before they get better.

How bad is bad? The typical load average on the LII’s production server is supposed to hover somewhere around 4; that’s what you see on a fully-utilized machine that isn’t straining. Dan Nagy, the LII’s systems administrator, is seeing 39. Dan is not eager to tell you how he knows, but a bad decision made in the course of a tune-up could take it to 300 in about 45 seconds. If that happens, the LII is about as useful as a wet brick. And the LII crew knows: the slow get slower. Anybody who has ever driven on the Boston freeways during the morning rush can see how that works. I-93’s running at capacity, some bozo drops a soda can out the window, and hey-presto, traffic jams solid all the way south to New Bedford.
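For readers who like to see the arithmetic behind "the slow get slower", here is a minimal sketch. It assumes a textbook single-server queue (the M/M/1 model), in which the average time a request spends in the system is the unloaded service time divided by one minus the utilization. That model is a stand-in chosen for illustration, not a description of how the LII server actually behaves, and the function name and utilization figures are made up.

```python
def mean_response_time(service_time: float, utilization: float) -> float:
    """Average time in an M/M/1 queue: service_time / (1 - utilization).

    Illustrative model only; a real web server is more complicated.
    """
    if not 0 <= utilization < 1:
        raise ValueError("utilization must be at least 0 and less than 1")
    return service_time / (1.0 - utilization)


# Hypothetical utilization levels, with the unloaded time normalized to 1.
for rho in (0.50, 0.80, 0.90, 0.95):
    slowdown = mean_response_time(1.0, rho)
    print(f"utilization {rho:.0%}: about {slowdown:.0f}x the unloaded time")
```

In this model, going from 90% to 95% busy doubles the wait. A small extra push near capacity costs far more than the same push on a quiet machine, which is the soda can on I-93.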

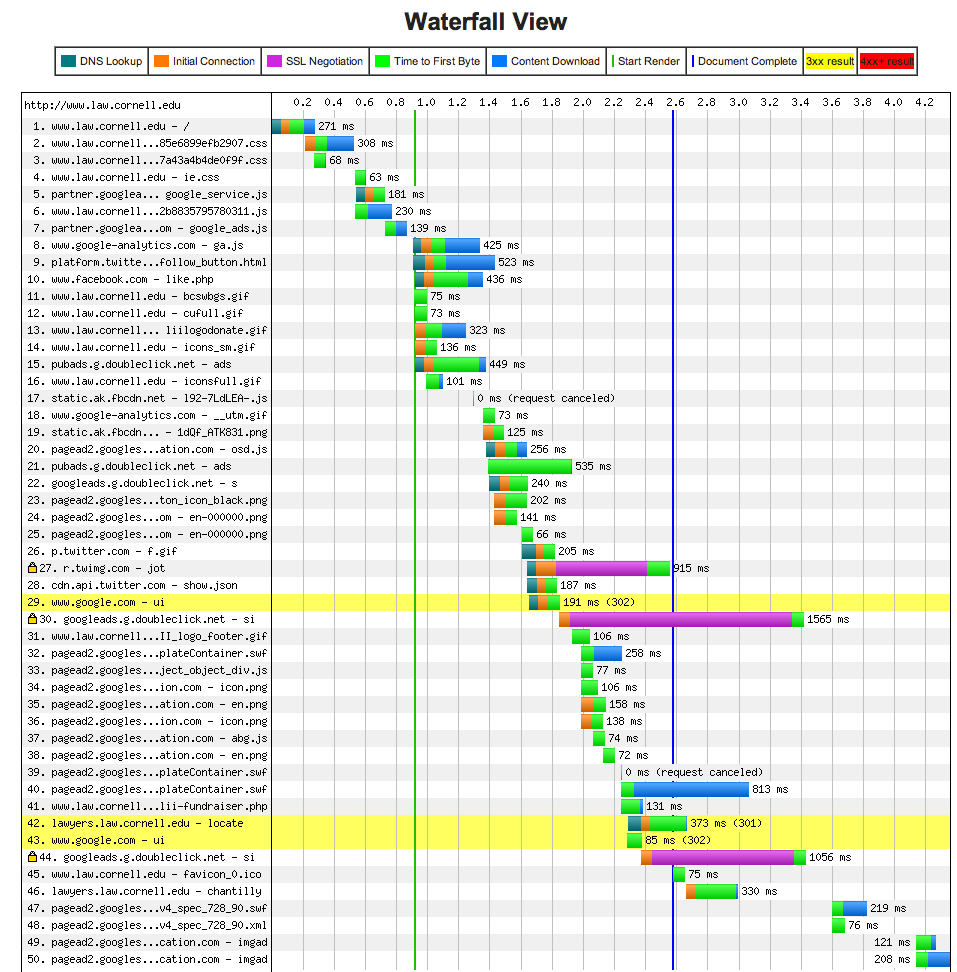

Sara runs the LII’s crew of engineers. Right now, she is talking to Dan and to Wayne Weibel, the LII’s Drupal developer. They’re looking at the output from WebPageTest.org, a free service that gives a lot of web-performance diagnostics (see below). A couple of things are obvious. The server isn’t clearing connections fast enough. They try a couple of quick alterations to the server configuration. The first shoots the load numbers through the roof, and locks up the server. Oops. Definitely not an improvement. A second is successful. Now the server’s clearing connections fast enough to not bog down completely, so the site’s visitors are getting what they want. But it’s still slow. Time to go under the hood.

A single web page at the LII is made up of a lot of pieces. The home page has 65 separate components. Around 20 of them come from the LII, and the remainder are things like that little Facebook logo with the number of “likes” on the page, which has to be fetched from Facebook every time a visitor shows up. Each LII component can involve multiple database queries that look up text, provide additional links to related information, and so on. Every component comes with a “set-up charge” — the cost in time of setting up a connection from the user’s browser so that the component can be retrieved. There are a lot of moving parts. The LII uses a variety of strategies that reduce the need to regenerate pages, either holding preassembled pages in a cache to be served up directly, or telling the user’s browser that it’s OK to hang on to pieces that get used repetitively and not reload them. Either approach cuts down on the work the server has to do. Right now, it’s not enough.
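The two caching strategies described above can be sketched in a few lines. This is a toy, not the LII's implementation: the `PageCache` class, the `build_page` stand-in, the example path, and the time-to-live values are all hypothetical. The only real-world piece is the `Cache-Control` header, which is the standard HTTP way to tell a browser how long it may reuse a component.

```python
import time
from typing import Callable, Dict, Tuple


class PageCache:
    """Tiny in-memory cache of preassembled pages with a time-to-live."""

    def __init__(self, ttl_seconds: float) -> None:
        self.ttl_seconds = ttl_seconds
        self._store: Dict[str, Tuple[float, str]] = {}
        self.builds = 0  # counts how often a page had to be regenerated

    def get(self, path: str, build: Callable[[str], str]) -> str:
        now = time.monotonic()
        cached = self._store.get(path)
        if cached is not None and now - cached[0] < self.ttl_seconds:
            return cached[1]  # serve the preassembled copy
        html = build(path)  # the expensive part: queries, templating
        self.builds += 1
        self._store[path] = (now, html)
        return html


def build_page(path: str) -> str:
    # Stand-in for the real work of assembling a page.
    return f"<html><body>Content for {path}</body></html>"


def browser_cache_headers(max_age_seconds: int) -> Dict[str, str]:
    # Tells the visitor's browser it may keep a component for this long.
    return {"Cache-Control": f"public, max-age={max_age_seconds}"}


cache = PageCache(ttl_seconds=300)
first = cache.get("/example/page", build_page)
second = cache.get("/example/page", build_page)
print(cache.builds)  # the second request was served from the cache
print(browser_cache_headers(3600))
```

The server-side cache saves the database queries; the browser header saves the request altogether. Both only help with pieces that do not change between visits.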

Over the next three days, Sara, Wayne, and Dan will spend a lot of time staring at complex “waterfall” diagrams that give a timeline for the loading of each component that goes into the page. The waterfall also shows them what’s waiting for what; some components need others before they can load. They’ll identify bottlenecks and either eliminate them or find a way to make the component smaller so it takes less time to load. The server will be tweaked so that it doesn’t clog up as quickly. Components will be combined so that they can be loaded from a single file, reducing the number of data connections needed and the setup time involved. They’ll get the average page loading time from 7 seconds down to under 4, even during the afternoon rush. Three seconds doesn’t sound like much, but for users, it’s an eternity. During the rush, it’s critical. An average load time of 7 seconds quickly ramps up to 16 seconds or more, because, well, the slow get slower. An average load time of 4 seconds is stable at 4 seconds. The crew think they can get it down to 3. The Director wants 2. The LII audience wants something instantaneous, or even better, telepathy. Sara and the crew will turn this crisis into an opportunity. Suddenly, everybody’s got a lot of good ideas about how to make things even faster and they’re starting to compete with the clock. The team is drag-racing now.
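Why does combining components help? A rough back-of-the-envelope sketch, using the count of about 20 LII-served components on the home page. The 100-millisecond set-up charge and the target of 8 combined files are assumptions picked for illustration, not measurements from the LII's waterfalls, and the total is aggregate connection work rather than wall-clock time, since browsers fetch several components in parallel.

```python
def total_setup_ms(requests: int, setup_ms_per_request: int) -> int:
    """Aggregate connection set-up time across all requests, in milliseconds."""
    return requests * setup_ms_per_request


LII_COMPONENTS = 20      # roughly the number served by the LII itself
COMBINED_FILES = 8       # hypothetical count after merging scripts and styles
SETUP_MS = 100           # assumed set-up charge per request

before = total_setup_ms(LII_COMPONENTS, SETUP_MS)
after = total_setup_ms(COMBINED_FILES, SETUP_MS)
print(f"before: {before} ms, after: {after} ms, saved: {before - after} ms")
```

The payload is the same size either way. What shrinks is the number of times the browser has to pay the set-up charge.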

There’s more to all this than speed. Because the server is now on the right side of the loading curve, it can serve around 150 more simultaneous users during rush hour, when there are typically around 1350 simultaneous visitors on the site. That’s an 11% increase. There are also implications for our donors. Throwing hardware at a problem like this can be expensive. None of the changes they’ve made involved upgrading hardware or buying more computing cycles. That’s a good thing; the next-biggest server (a “double extra large”, in cloud-computing-speak) would cost about twice what we’re paying now.
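For anyone checking the numbers, the arithmetic is short. The figures come straight from the story; the only liberty taken is treating "under 4 seconds" as exactly 4, which understates the speedup slightly.

```python
baseline_visitors = 1350   # typical simultaneous visitors at rush hour
extra_visitors = 150       # additional simultaneous users now served

capacity_increase = extra_visitors / baseline_visitors
print(f"capacity increase: {capacity_increase:.1%}")

old_load_seconds = 7.0
new_load_seconds = 4.0     # "under 4", so the real ratio is a bit higher
speedup = old_load_seconds / new_load_seconds
print(f"speedup: at least {speedup:.2f}x")
```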

So, more people in the LII audience getting more legal information twice as fast at no increase in cost. What’s not to like about that?