As a first-year law student, you are handed a few things (at least where I studied): a prefabricated schedule, a non-negotiable slate of professors, and a basic history lesson, illustrated through individual cases. During my first year, the professor I fought with the most was my property law teacher. I now realize that it wasn’t her I couldn’t accept; it was the implications of the worldview she presented. She saw “property law” as a construct through which wealthy people protected their interests at the expense of those who lacked the means to defend themselves. Every case, from “fast fox, loose fox” on down, was an example of someone manipulating or changing the rules to keep the poor from fighting for their interests. It was a pretty radical position to accept, and I, maybe to my own discredit, ignored it.

Then I graduated. I began looking at legal systems around the world and tried to get a sense of how they actually function in practice. I found something a bit startling: they don’t function. Or at least, not for most of us.

Justice: Inaccessible

At first glance, that may seem alarmist. Honestly, it feels a bit radical to say. But consider that in 2008, the United Nations issued a report entitled Making the Law Work for Everyone, which estimated that 4 billion people (of a global population of 6 billion at the time) lacked “meaningful” access to the rule of law.

Stop for a second. Read that again. Two-thirds of the world’s population lack meaningful access to rule-of-law institutions. This means that they lack not just substantive representation or equal treatment, but even the most basic access to justice.

Now, before you write me (and the UN) off as crackpots, I must add a few caveats. “Rule-of-law institutions,” in the UN report, means formal, government-sponsored systems. The term leaves out pluralistic systems, which rely on adapted or traditional actors, many of whom operate entirely outside the purview of government, to settle civil or small-scale criminal disputes. Similarly, the word “meaningful,” in this context, is somewhat ambiguous. Making the Law Work for Everyone isn’t clear about what standards it uses to determine what constitutes “access,” “fairness,” or relevant and substantive law (i.e., the number and content of laws). The report focuses mainly on finding the level of formalism that produces inclusive systems. But by defining pluralism and cultural relativism out of scope while measuring a global standard for an internationally (and often constitutionally) protected service, it significantly complicates its own analysis.

What’s causing the gap in access to justice?

So, let’s work from the basics. The global population has been rising steadily for, well, a while now, increasing the volume of need that legal systems must address. At the same time, the number of countries has grown, and with them the number of young legal systems, often lacking precedent or established institutional infrastructure. As the number of legal systems has grown, so too have the public’s expectations that these systems will provide formalized justice procedures. Within each of these nations, trends like urbanization, the emergence of new technologies, and the expansion of regulatory frameworks increase the number and complexity of the laws each domestic justice system is charged with enforcing. On top of this, the internationalization and proliferation of treaties, trade agreements, and institutions impose another layer of complexity on what are often already overburdened enforcement mechanisms. It’s understandable, then, that just about every government in the world struggles not only to create justice systems that address all of these very complicated issues, but also to administer those systems so that they offer universally equal access and treatment.

Predictably, private industry noticed these trends long ago, so it should be no surprise that the cost of legal services has been rising steadily for at least 20 years. Law is unusual in that it creates its own complexity, and that complexity is also the basis of its business model: the harder the law is to understand, the more work there is for lawyers. It also means that fewer people will have the specialized skills and relationships needed to achieve an outcome through the legal system.

To complicate matters further, because clients’ needs and circumstances vary so significantly, it’s very difficult to reliably judge the quality of service a lawyer provides. The result is a market where people, lacking any other reliable indicator, judge by price, aesthetics, and reputation. To a limited extent, this enables lawyers to manufacture high-end demand by inflating prices and, well, wearing really nice suits. (Yes, this is an oversimplification. But they do often wear REALLY nice suits.) This excludes (or short-shrifts) middle- and low-income clients, who need the same level of care but are less concerned with the attire. Incidentally, the market being excluded is enormous in both size and spending power, even given growing wealth inequality.

Redesigning legal services

I don’t mean to be simplistic or to restate widely understood criticisms of legal systems. Instead, I want to lay out the foundations of my understanding. See, I approach this from a design viewpoint. The two perspectives above, namely that of governments trying to implement systems and that of law firms trying to capitalize on available service markets, often neglect the one design perspective that determines success: that of the user. When we judge the success of legal systems, we don’t spend nearly enough time thinking about what the average person encounters when trying to engage with them. For most people, the accessibility (both physical and intellectual) and the procedures of the law are as decisive for participation in the justice system as whether the system meets international standards.

In my view, the individuals and organizations on the cutting edge of this thinking are those tasked with delivering legal services to low-resource and rural populations. Commercial and governmental legal service providers simply haven’t found a model that lets them effectively engage these populations, who also make up the world’s largest (relatively) untapped markets. Legal aid providers, however, meet the individuals who must overcome barriers like cost, time, education, and distance just to preserve the status quo, as well as those who seek protection. From the perspective of legal aid clients, the biggest challenge to accessing the justice system may be that courts are often located dozens of miles from their homes, over miserable roads. Or it may be that clients have to appear in court repeatedly to accomplish what seem like small tasks, such as extensions or depositions. Or it may simply be not knowing whom to approach to accomplish their law-related goals. Each of these challenges is a barrier to access to justice. Each barrier, when alleviated, represents an opportunity for engagement and, if done correctly, an opportunity for financial sustainability.

Mobile points the way

None of this is intended as criticism — almost every major service industry in the world grapples with the same challenges. Well, with the exception of at least one: the mobile phone industry. The emergence of mobile phones presents two amazing opportunities for the legal services industry: 1) the very real opportunity for effective engagement with low-income and rural communities; and 2) an example of how, when service offerings are appropriately priced, these communities can represent immensely profitable commercial opportunities.

Let’s begin with a couple of quick points of information. Global mobile penetration is approximately 5.3 billion active subscriptions, equivalent to 78 percent of the world’s population. Every one of those mobile phone accounts can do two things: 1) make calls; and 2) send text messages. Text messaging, or SMS (Short Message Service), is particularly interesting in the context of legal services because it offers a way to engage with a client, or a potential client, immediately, cheaply, and digitally. There are 4.3 billion active SMS users in the world, more than twice the global Internet population of 2 billion, and in 2010 the world sent 6.1 trillion text messages, a figure that has tripled in the last 3 years and is projected to double again by 2013. It’s no exaggeration, at this point, to say that mobile technology is transforming, basically, everything. What has not been fully explored is why and how mobile devices can transform service delivery in particular settings.

Why is SMS so promising?

Something well understood in the technology space is the value of approaching people through the platforms they’re familiar with. In fact, in technology, the thing that matters most is use. Everything has to make sense to users and make tasks easier than they would be without the system. This thinking largely takes place in a technology niche called “user-interface design.” (Forgive the nerdy term, lawyers. Forgive the simplicity, fellow tech nerds.) User-interface designers are the people who design the way people engage with a new piece of technology.

Judged this way, and considering its 4.3 billion users, SMS is one of the best- and most simply designed technologies ever. SMS is instant, (usually) cheap, private, digital, standardized, asynchronous (unlike with a phone call, people can respond whenever they want), and very easy to use. These benefits have made it the most widely used digital text-based communication tool in human history.

User-Interface-Design Principles + SMS + Legal Services = ?

So. What happens when you take user-interface design thinking and apply it to legal systems? Given that the assumptions underlying most formal legal systems date from when those systems originated (often hundreds of years ago), how would we update or change what we do to improve the way legal systems function?

There are a lot of good answers to those questions, and moves toward transactional representation, form standardization (à la LegalZoom), legal process outsourcing (à la Pangea3), legal information systems (there are a lot), and process automation (such as document assembly) are all tremendously interesting approaches to this work. Unfortunately, I’m not an expert on any of those.

FrontlineSMS:Legal

I work for an organization called FrontlineSMS, where I also founded our FrontlineSMS:Legal project. At FrontlineSMS, we design simple pieces of technology that make it easier to use SMS for complex, professional engagement. The FrontlineSMS:Legal project seeks to capitalize on the benefits of SMS to improve access to justice and the efficiency of legal services. That is, I spend a lot of my time thinking about all the ways SMS can be used to provide legal services to more people, more cheaply.

And the good news is, I think, that there are a lot of ways to do this. Pardon me while I geek out on a few.

Intake and referral

The process of remote legal client intake and referral takes a number of forms, depending on the organization, procedural context, and infrastructure. Within most legal processes, the initial interview between a service provider and a client is an exceptionally important and complex interaction. There are, however, often a number of simpler communications that precede and coordinate the initial interview, such as very basic information collection and appointment scheduling, which could be conducted remotely via SMS.

Given the complexity of legal institutions, providing remote intake and referral can significantly reduce the inefficiencies that so-called “last-mile” populations (i.e., populations who live in “areas …beyond the reach of basic government infrastructure or services”) face in seeking access to services. The problem of complexity is often compounded by the concentration of legal service providers in urban areas, which requires potential clients to travel just to begin these processes. Furthermore, most rural or extension services keep paper records, which are physically transported to central locations at fixed intervals. These records are impractical from a workflow-management perspective and are often left unexamined in unwieldy filing systems. FrontlineSMS:Legal can reduce these barriers by creating mobile interfaces for digital intake and referral systems, which let clients complete simple interactions, such as identifying the appropriate service provider and scheduling an appointment.
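
To make the intake-and-referral idea concrete, here is a minimal Python sketch of how an SMS hub might handle a first contact: a client texts an issue keyword and a district, and the system replies with a referral and, where one is free, an appointment slot. Everything here is hypothetical and for illustration only: the `Provider` record, the `handle_intake` function, and the keyword-plus-district message format are assumptions, not FrontlineSMS’s actual interface, and actually receiving and sending the SMS is left out.

```python
from dataclasses import dataclass, field
from datetime import datetime


@dataclass
class Provider:
    """Hypothetical directory entry: who handles which issues in which district."""
    name: str
    issues: set[str]
    district: str
    open_slots: list[datetime] = field(default_factory=list)  # assumed chronological


def handle_intake(text: str, providers: list[Provider]) -> str:
    """Parse an incoming SMS such as 'LAND Riverside' and return the reply text."""
    parts = text.strip().split(maxsplit=1)
    if len(parts) != 2:
        return "Please reply with your issue and district, e.g. LAND Riverside"
    issue, district = parts[0].upper(), parts[1].strip().title()
    for provider in providers:
        if issue in provider.issues and provider.district == district:
            if provider.open_slots:
                slot = provider.open_slots.pop(0)  # book the earliest free slot
                return f"Referred to {provider.name}. Appointment: {slot:%d %b %H:%M}"
            return f"Referred to {provider.name}. They will text you to schedule."
    return f"No provider found for {issue} in {district}. A caseworker will contact you."


if __name__ == "__main__":
    directory = [
        Provider("Riverside Legal Aid Clinic", {"LAND", "FAMILY"}, "Riverside",
                 [datetime(2011, 5, 3, 10, 0)]),
    ]
    print(handle_intake("land riverside", directory))
    # → Referred to Riverside Legal Aid Clinic. Appointment: 03 May 10:00
```

The reply-driven design keeps each exchange to a single short text, which is the kind of “simple interaction” the paragraph has in mind; anything more complex still goes to the in-person interview.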

Client and case management

After intake, most legal processes require service providers to interact with their clients on multiple occasions to gather follow-up information, prepare the case, and manage successive court hearings. Because each such meeting requires people from last-mile communities to travel significant distances, the iterative nature of these processes often imposes a burden on clients that is disproportionate to the desired outcome. In addition, many countries struggle to provide adequate postal or fixed-line telephone services, so organizing follow-up appointments with clients can be a significant challenge. These challenges become considerably more complicated in cases with multiple elements that require coordination between clients and institutions.

Similarly, to follow up with clients, service providers must place person-to-person phone calls, which can take significant chunks of time. Moreover, internal case management systems are built from paper records, causing large amounts of duplicative data entry and lags in data availability.

To alleviate these problems, we propose that legal service providers install a FrontlineSMS:Legal hub in a central location, such as a law firm or public defender’s office. During the intake interview, service agents would record the client’s mobile number and use SMS as an ongoing communications platform.

By creating a sustained communications channel between service providers and clients, lawyers and governments could communicate simple information, such as hearing reminders, probation compliance reminders, and simple case details. Additionally, these communications could be automated and sent to entire groups of clients, thereby reducing the amount of time required to manage clients and important case deadlines. This set of tools would reduce the barriers to communication with last-mile clients and create digital records of these interactions, enabling service providers to view all of these exchanges in one easy-to-use interface, reducing duplicative data entry and improving information usability.
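
The automated reminder workflow described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: the `Case` record, the `build_reminders` function, the two-day reminder window, and the per-case `history` dictionary are all hypothetical, and the sketch only builds the outgoing (phone, message) pairs; handing them to an SMS hub or gateway for delivery is out of scope.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class Case:
    """Hypothetical case record captured at intake."""
    case_id: str
    client_phone: str
    hearing_date: date


def build_reminders(cases: list[Case], today: date, history: dict[str, list[str]],
                    days_ahead: int = 2) -> list[tuple[str, str]]:
    """Queue one reminder per hearing falling between today and today + days_ahead.

    Each queued message is also appended to history[case_id], so the batch
    doubles as a digital record of what was sent to whom.
    """
    window_end = today + timedelta(days=days_ahead)
    outbox = []
    for case in sorted(cases, key=lambda c: c.hearing_date):
        if today <= case.hearing_date <= window_end:
            msg = f"Reminder: case {case.case_id} has a hearing on {case.hearing_date:%d %b %Y}."
            outbox.append((case.client_phone, msg))
            history.setdefault(case.case_id, []).append(msg)
    return outbox


if __name__ == "__main__":
    cases = [
        Case("C-101", "+15550100", date(2011, 5, 4)),
        Case("C-102", "+15550101", date(2011, 5, 20)),
    ]
    log: dict[str, list[str]] = {}
    for phone, msg in build_reminders(cases, today=date(2011, 5, 3), history=log):
        print(phone, msg)
    # → +15550100 Reminder: case C-101 has a hearing on 04 May 2011.
```

Because every queued message is also written to the case’s `history`, the same step that sends a batch of reminders leaves a digital record of it, which is how such a system could cut the duplicative data entry mentioned earlier.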

Caseload- and service-extension agent management

Although this article focuses largely on innovations that improve direct access to legal services for last-mile populations, the same tools also have the effect of improving internal system efficiency by digitizing records and enabling a data-driven approach to measuring outcomes. Both urban and rural service extension programs have a difficult time monitoring their caseloads and agents in the field. The same communication barriers that limit a service provider’s ability to connect with last-mile clients also prevent communication with remote agents. Mobile interfaces have the effect of lowering these barriers, enabling both intake and remote reporting processes to feed digital interfaces. These digital record systems, when used effectively, inform a manager’s ability to allocate cases to the most available service provider.

In legal processes, supervising attorneys can use the same SMS hubs that administer intake and case management to digitize their internal management structures. A single central hub, fed both by the intake that information desks often perform and by remote input from service extension agents where they exist, can allow managers to assign cases to individual service providers and then track them through disposition. In doing so, legal service coordinators will be able to track each employee’s workload in real time. In addition, system administrators will be able to see the types and frequency of the cases they take on, which will inform how they allocate resources. If, for example, one area has a dramatically higher number of cases than another, it may make sense to deploy multiple community legal advisors to address the area of greatest need.
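
A hub that knows each provider’s open cases could implement the simplest version of “assign to the most available provider”: give each new case to whoever currently has the fewest open cases, and keep a running count of cases per area. The Python sketch below assumes exactly that least-loaded rule; the `Caseload` class and its methods are hypothetical, and a real service might also need to weigh expertise, location, and case complexity.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class Caseload:
    """Hypothetical in-memory view of a hub's caseload."""
    open_cases: dict[str, list[str]] = field(default_factory=dict)  # provider -> open case IDs
    area_counts: Counter = field(default_factory=Counter)           # area -> cases received so far

    def add_provider(self, name: str) -> None:
        self.open_cases.setdefault(name, [])

    def assign(self, case_id: str, area: str) -> str:
        """Give the case to the provider with the fewest open cases (ties go to
        the provider registered first) and return that provider's name."""
        if not self.open_cases:
            raise ValueError("no providers registered")
        provider = min(self.open_cases, key=lambda p: len(self.open_cases[p]))
        self.open_cases[provider].append(case_id)
        self.area_counts[area] += 1
        return provider

    def close(self, case_id: str) -> None:
        """Record a disposition, freeing capacity; area_counts stays cumulative."""
        for cases in self.open_cases.values():
            if case_id in cases:
                cases.remove(case_id)
                return
        raise KeyError(case_id)

    def workload(self) -> dict[str, int]:
        """Open cases per provider: the real-time workload view."""
        return {name: len(cases) for name, cases in self.open_cases.items()}


if __name__ == "__main__":
    board = Caseload()
    for name in ("Advisor A", "Advisor B"):
        board.add_provider(name)
    for case_id, area in [("C-1", "North"), ("C-2", "North"), ("C-3", "South")]:
        print(case_id, "->", board.assign(case_id, area))
    board.close("C-1")
    print(board.workload())                  # → {'Advisor A': 1, 'Advisor B': 1}
    print(board.area_counts.most_common(1))  # → [('North', 2)]
```

The per-area counts are what would surface the situation the paragraph describes, where one area has far more cases than another and may warrant additional community legal advisors.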

Ultimately, though, SMS use in legal services remains largely untested. FrontlineSMS is currently working with several partners to design specific mobile interfaces that meet their needs. These efforts will definitely turn up new and interesting things that can be done using SMS and, particularly, FrontlineSMS. These projects, however, are still largely in the design phase.

Beyond the practical challenges of implementation, many challenges lie ahead as we begin to consider the implications of professional use of SMS. Issues such as security, privacy, identity, and chain of custody will all need to be addressed as systems adapt to include new technologies. A number of brilliant minds are well ahead on this, and we’ve even jury-rigged a few solutions ourselves, but there will be plenty to learn along the way.

The potential is great

What is clear, though, is that SMS has the potential to improve cost efficiencies, engage new populations, and, for the first time, build a justice system that works for the people who need it most.

I don’t think any of this will square me with my property-law professor. I’m not sure I’ll ever fix property law. But I do think that by reaching out to new populations using the technologies in their pockets, we can make a difference in the way people interact with the law. And even if that’s just a little bit, even if it just enables one percent more people to protect their homes, start a business, or pursue a better life, isn’t that worth it?

[Editor’s Note: For other VoxPopuLII posts on using technology to improve access to justice, please see Judge Dory Reiling, IT and the Access-to-Justice Crisis; Nick Holmes, Accessible Law; and Christine Kirchberger, If the mountain will not come to the prophet, the prophet will go to the mountain.]

Sean Martin McDonald is the Director of Operations at FrontlineSMS and the founding Director of FrontlineSMS:Legal. He holds JD and MA degrees from American University. He is the author, most recently, of The Case for mLegal.

VoxPopuLII is edited by Judith Pratt. Editor-in-Chief is Robert Richards, to whom queries should be directed. The statements above are not legal advice or legal representation. If you require legal advice, consult a lawyer. Find a lawyer in the Cornell LII Lawyer Directory.

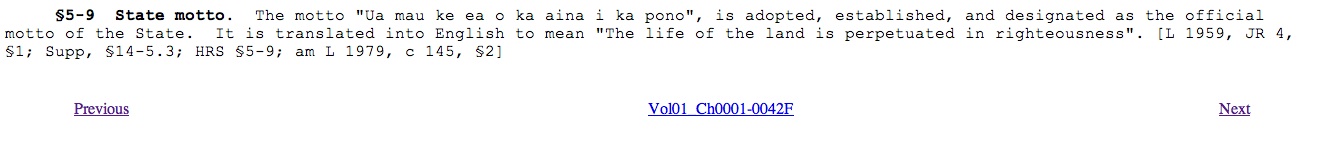

The least-painful path at present is to visit
